Interpolate Pixel

04-29-2024 06:25 AM - edited 04-29-2024 06:31 AM

@Andrey_Dmitriev wrote:

@paul_a_cardinale wrote:

So my new problem is:

- Apply the distortion...

Maybe OpenCV's remap will help you in this case (just an idea). I've used it in the past for classical lens distortion correction (based on a dot-pattern grid), and it works.

Today on my lunch break, I created a simple demo showing how distortion can be applied. I know you're on a Mac, and Windows-based code is almost useless for you, but this is just an idea. OpenCV can be used on a Mac, and in the worst case, you can take a look at cvRemap and re-implement it in LabVIEW.

Let's say we have a distorted image and an accompanying Grid Pattern, which is used for correction:

NI Vision has a couple of functions for geometrical calibration, but they don't do remapping. This is generally not necessary, because almost all measurement functions can use the geometrical calibration information and work in real-world coordinates. However, if we want to perform optical distortion correction, we just need to prepare X-Y maps of the "translation" from the distorted to the "undistorted" image and pass them to the OpenCV remap function, something like this (the magic constants 30/60 just shift and center the image):

C++ Source Code:

    #include <cstdint>
    #include "opencv2/imgproc.hpp"
    #include "vision/nivision.h"

    using namespace cv;

    typedef uintptr_t NIImageHandle;

    // LabVIEW array of clusters: one (X, Y) source coordinate per destination pixel
    typedef struct {
        float X;
        float Y;
    } TD2;

    typedef struct {
        int32_t dimSize;
        TD2 elt[1];
    } TD1;

    typedef TD1** TD1Hdl;

    extern "C" int LV_SetThreadCore(int NumThreads);
    extern "C" int LV_LVDTToGRImage(NIImageHandle niImageHandle, void* image);

    extern "C" __declspec(dllexport) int Remap(NIImageHandle SrcImage, NIImageHandle DstImage, TD1Hdl XYmap)
    {
        Image *ImgSrc, *ImgDst;
        unsigned char *LVImagePtrSrcU8, *LVImagePtrDstU8;
        int LVWidth, LVHeight;
        int LVLineWidthSrc, LVLineWidthDst;

        if (!SrcImage || !DstImage) return ERR_NOT_IMAGE;
        if (!XYmap || !*XYmap) return ERR_NULL_POINTER;

        LV_SetThreadCore(1); // must be called prior to LV_LVDTToGRImage
        LV_LVDTToGRImage(SrcImage, &ImgSrc);
        LV_LVDTToGRImage(DstImage, &ImgDst);
        if (!ImgSrc || !ImgDst) return ERR_NOT_IMAGE;

        // The destination gets the same size as the source (U8 images assumed)
        LVWidth = ((ImageInfo*)ImgSrc)->xRes;
        LVHeight = ((ImageInfo*)ImgSrc)->yRes;
        imaqSetImageSize(ImgDst, LVWidth, LVHeight);

        // Line width includes NI Vision's border/alignment padding, so it is passed to cv::Mat as the step
        LVLineWidthSrc = ((ImageInfo*)ImgSrc)->pixelsPerLine;
        LVLineWidthDst = ((ImageInfo*)ImgDst)->pixelsPerLine;
        LVImagePtrSrcU8 = (unsigned char*)((ImageInfo*)ImgSrc)->imageStart;
        LVImagePtrDstU8 = (unsigned char*)((ImageInfo*)ImgDst)->imageStart;
        if (!LVImagePtrSrcU8 || !LVImagePtrDstU8) return ERR_NULL_POINTER;

        // Wrap the NI Vision pixel buffers in cv::Mat headers (no copy)
        Size size(LVWidth, LVHeight);
        Mat src(size, CV_8U, LVImagePtrSrcU8, LVLineWidthSrc);
        Mat dst(size, CV_8U, LVImagePtrDstU8, LVLineWidthDst);
        Mat map_x(src.size(), CV_32FC1);
        Mat map_y(src.size(), CV_32FC1);

        // Split the row-major cluster array into separate X and Y maps.
        // The caller must pass at least LVWidth * LVHeight elements.
        for (int row = 0; row < map_x.rows; row++) { // height, y
            for (int col = 0; col < map_x.cols; col++) { // width, x
                const TD2& p = ((*XYmap)->elt)[col + row * map_x.cols];
                map_x.at<float>(row, col) = p.X;
                map_y.at<float>(row, col) = p.Y;
            }
        }

        // OpenCV remap: bilinear sampling, pixels mapped outside the source become 255
        remap(src, dst, map_x, map_y, INTER_LINEAR, BORDER_CONSTANT, Scalar(255));
        return 0;
    }

I'll just leave the complete code here (LV_OpenCV_RemapDemo); maybe it will be useful for someone who reads this topic in the future.
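For anyone who wants to re-implement the remap step without OpenCV (for example in LabVIEW on a Mac), here is a minimal sketch in plain C++ of bilinear remapping with a constant border. The function name `remapBilinear` and the tightly packed row-major layout are assumptions made for illustration; this is not OpenCV's actual implementation, and its rounding may differ slightly from what `remap` returns.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of OpenCV or NI Vision): for every destination
// pixel, sample the source at (mapX, mapY) with bilinear interpolation.
// Assumes 8-bit grayscale, row-major storage, `width` pixels per row, no padding.
std::vector<uint8_t> remapBilinear(const std::vector<uint8_t>& src,
                                   int width, int height,
                                   const std::vector<float>& mapX,
                                   const std::vector<float>& mapY,
                                   uint8_t border)
{
    std::vector<uint8_t> dst(static_cast<std::size_t>(width) * height, border);

    // Fetch a source pixel, or the border value when the coordinate is outside.
    auto at = [&](int x, int y) -> float {
        if (x < 0 || y < 0 || x >= width || y >= height) return border;
        return src[static_cast<std::size_t>(y) * width + x];
    };

    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            const std::size_t i = static_cast<std::size_t>(row) * width + col;
            const float x = mapX[i];
            const float y = mapY[i];
            const int x0 = static_cast<int>(std::floor(x));
            const int y0 = static_cast<int>(std::floor(y));
            const float fx = x - x0; // horizontal fraction in [0, 1)
            const float fy = y - y0; // vertical fraction in [0, 1)

            // Blend the two upper neighbours, the two lower neighbours, then both rows.
            const float top    = at(x0, y0)     * (1 - fx) + at(x0 + 1, y0)     * fx;
            const float bottom = at(x0, y0 + 1) * (1 - fx) + at(x0 + 1, y0 + 1) * fx;
            const float value  = top * (1 - fy) + bottom * fy;
            dst[i] = static_cast<uint8_t>(std::lround(value));
        }
    }
    return dst;
}
```

An identity map (mapX = column index, mapY = row index) returns the source unchanged; a distortion is applied by filling the maps with the distorted source coordinates instead.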

04-29-2024 06:56 AM

@altenbach wrote:

wiebe@CARYA wrote:

It's still not clear to me what the goal is.

The original VI returned a single interpolated pixel.

The focus now seems to be on interpolating an entire image.

There are overlapping use cases, but also very different use cases.

The subVI interpolates exactly one pixel as in the original question, but that would be relatively hard to test because it is so fast and an isolated first call possibly has more overhead.

The outer shell code is just a benchmark harness to see how it performs on numerous pixels, i.e. repeated calls differing in inputs. It also verifies that the result looks approximately correct.

(But yes, to expand an entire picture, there are also other possibilities, of course)

Seems to focus on converting the entire image again though....

04-29-2024 12:11 PM

wiebe@CARYA wrote:

Wouldn't the U8 overflow?

Adding 4 U8s will only fit in a U8 if the input U8s are 6 bit...

If the value really is a U8, you could make a real LUT, using the U8 as an index into the table. Interpolating is expensive... Even a LUT with a U16 index will be fast, but an I32 will be impractical.

It's messed up. There are also some edge cases that could break it. Each of the 4 values should fit into 18 bits (but I could shrink that to 16).
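The overflow concern and the LUT idea can be illustrated with a small sketch in C++. It assumes the U8 sum wraps modulo 256, and all function names (`sumWrappedU8`, `sumWideU16`, `buildLut`, `invert`) are hypothetical, chosen only for this example.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Sum four 8-bit values and keep only 8 bits: the result wraps modulo 256.
uint8_t sumWrappedU8(uint8_t a, uint8_t b, uint8_t c, uint8_t d)
{
    return static_cast<uint8_t>(a + b + c + d);
}

// Same sum kept in a 16-bit value: 4 * 255 = 1020 always fits.
uint16_t sumWideU16(uint8_t a, uint8_t b, uint8_t c, uint8_t d)
{
    return static_cast<uint16_t>(a + b + c + d);
}

// A "real" LUT: evaluate the (possibly expensive) transfer function once for
// each of the 256 possible inputs, then use the U8 value as the table index.
std::array<uint8_t, 256> buildLut(uint8_t (*transfer)(uint8_t))
{
    std::array<uint8_t, 256> lut{};
    for (int v = 0; v < 256; ++v)
        lut[v] = transfer(static_cast<uint8_t>(v));
    return lut;
}

// Example transfer function (an assumption, just to have something to tabulate).
uint8_t invert(uint8_t v)
{
    return static_cast<uint8_t>(255 - v);
}
```

With 6-bit inputs the largest sum is 4 * 63 = 252, which still fits in 8 bits; one more bit per input and the sum can wrap.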

04-29-2024 05:53 PM

@paul_a_cardinale wrote:

It's messed up. There are also some edge cases that could break it. Each of the 4 values should fit into 18 bits (but I could shrink that to 16).

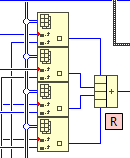

I was wrong about the edge cases, and the bit width. But I wasn't wrong about it being messed up. Here's the fixed version (the 2D array is I64).

04-29-2024 08:04 PM - edited 04-29-2024 08:10 PM

Here's the ticket. Plenty fast, even when accounting for gamma. Now I don't need a separate case when using gamma = 1 (so it's no longer a .vim).
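The attached VI is not reproduced in this text, so the following is only a guess at the general idea, sketched in C++: one LUT converts each U8 to a 16-bit linear value, the four neighbours are blended with integer weights, and a second LUT converts the result back to U8. The table sizes, the 8-bit fractional weights, and the names `GammaLuts`, `buildGammaLuts`, and `interpolateGamma` are all assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct GammaLuts {
    std::vector<uint16_t> toLinear;  // 256 entries: encoded U8 -> linear 0..65535
    std::vector<uint8_t>  toEncoded; // 65536 entries: linear 0..65535 -> encoded U8
};

// Floating-point math happens only here, once per gamma value.
GammaLuts buildGammaLuts(double gamma)
{
    GammaLuts luts;
    luts.toLinear.resize(256);
    luts.toEncoded.resize(65536);
    for (int v = 0; v < 256; ++v)
        luts.toLinear[v] = static_cast<uint16_t>(
            std::lround(65535.0 * std::pow(v / 255.0, gamma)));
    for (int l = 0; l < 65536; ++l)
        luts.toEncoded[l] = static_cast<uint8_t>(
            std::lround(255.0 * std::pow(l / 65535.0, 1.0 / gamma)));
    return luts;
}

// Bilinear blend of four neighbours using integer arithmetic only.
// fx and fy are fractional positions scaled to 0..256 (256 means 1.0).
uint8_t interpolateGamma(const GammaLuts& luts,
                         uint8_t p00, uint8_t p10, uint8_t p01, uint8_t p11,
                         unsigned fx, unsigned fy)
{
    // The four weights always sum to 256 * 256 = 65536.
    const uint64_t w00 = static_cast<uint64_t>(256 - fx) * (256 - fy);
    const uint64_t w10 = static_cast<uint64_t>(fx) * (256 - fy);
    const uint64_t w01 = static_cast<uint64_t>(256 - fx) * fy;
    const uint64_t w11 = static_cast<uint64_t>(fx) * fy;

    const uint64_t acc = w00 * luts.toLinear[p00] + w10 * luts.toLinear[p10]
                       + w01 * luts.toLinear[p01] + w11 * luts.toLinear[p11];

    // Divide by 65536 with rounding; the result stays within 0..65535.
    const uint32_t linear = static_cast<uint32_t>((acc + 32768) >> 16);
    return luts.toEncoded[linear];
}
```

With gamma = 1 both tables reduce to plain scaling, so the same code path covers the non-gamma case. For larger gammas, dark input values can collapse to the same 16-bit linear value, so results near black may not match a floating-point version exactly.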

04-29-2024 11:39 PM

@paul_a_cardinale wrote:

Here's the ticket. Plenty fast, even when accounting for gamma. Now I don't need a separate case when using gamma = 1 (so it's no longer a .vim).

That was exactly my "feeling": that it is possible to keep simple integer arithmetic at the end. I tried to do it in Threshold1D, but the precision loss was too large. Now you have done it. This time I won't bother you with a library-based version, as the expected speedup factor "LabVIEW code vs SharedLib" is not dramatically high; I'd expect around 1.5 to 2 times (it was tested in the case of gamma=1 somewhere above). However, I don't think the result will be mathematically equal to the previous version. For small gammas it may be, but for larger values it will be slightly different, I guess.

04-30-2024 03:41 AM - edited 04-30-2024 04:08 AM

@paul_a_cardinale wrote:

Here's the ticket. Plenty fast, even when accounting for gamma. Now I don't need a separate case when using gamma = 1 (so it's no longer a .vim).

That looks more like a proper LUT (or two actually).

You might gain even more speed by making the 2nd LUT a U32 and storing R, G, B in it, at the right position. Then you don't need to join the 4 U8s; you can OR the 3 U32s. It might be faster, could be slower too (it's joining 4 U8s + 2 U16s vs. OR-ing 3 U32s).

I'd personally make this a class and store the LUTs in the private data, being careful not to create class copies, of course. That way you can more easily have more than one gamma without the LUTs being recalculated each time the gamma changes.

But I am strongly biased against VIs with side effects, such as references and state.
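A rough C++ sketch of both suggestions, assuming a 0x00RRGGBB pixel layout and a simple per-channel gamma encode as the tabulated function (the class name `PackedGammaLut` and its members are hypothetical):

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Holds three U32 LUTs whose entries are already shifted to the channel's
// position, so packing a pixel is three table reads and two ORs.
class PackedGammaLut {
public:
    // The tables are computed once per object; a second gamma gets its own object.
    explicit PackedGammaLut(double gamma)
    {
        for (int v = 0; v < 256; ++v) {
            const uint32_t out = static_cast<uint32_t>(
                std::lround(255.0 * std::pow(v / 255.0, 1.0 / gamma)));
            red_[v]   = out << 16; // bits 16..23
            green_[v] = out << 8;  // bits 8..15
            blue_[v]  = out;       // bits 0..7
        }
    }

    // Returns the pixel as 0x00RRGGBB (assumed layout).
    uint32_t pack(uint8_t r, uint8_t g, uint8_t b) const
    {
        return red_[r] | green_[g] | blue_[b];
    }

private:
    std::array<uint32_t, 256> red_{};
    std::array<uint32_t, 256> green_{};
    std::array<uint32_t, 256> blue_{};
};
```

Passing such an object by const reference avoids copying the tables. Whether the OR-based packing beats joining bytes is something only a benchmark on the target platform can answer.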

04-30-2024 09:33 AM

How does it fare if it's all Singles instead of Doubles?

04-30-2024 11:14 AM - edited 04-30-2024 11:21 AM

@Yamaeda wrote:

How does it fare if it's all Singles instead of Doubles?

Usually singles are faster than doubles, but mostly with large arrays (because of memory access, cache, etc.). It is quite easy to demonstrate:

In this particular case I don't think it will improve much. Moreover, you may be surprised: SGL in my test (2023 and 2024 64-bit) is roughly twice as slow:

But in LabVIEW 2018, 2023 and 2024 32-bit the speed is almost the same:

So it depends and needs to be checked in each individual case; on a Mac or Linux the situation could be completely different.
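For anyone who wants to run the same kind of comparison outside LabVIEW, here is a minimal timing harness in C++. It is only a sketch: the workload (a scaled sum over an array) and the names `BenchResult` and `benchScaleSum` are assumptions, and the timings depend entirely on compiler, flags, and hardware.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

template <typename T>
struct BenchResult {
    T checksum;     // returned so the compiler cannot discard the work
    double seconds; // wall-clock time for all repeats
};

// Runs the same multiply-and-accumulate loop on an array of T (float or double).
template <typename T>
BenchResult<T> benchScaleSum(std::size_t n, int repeats)
{
    std::vector<T> data(n, static_cast<T>(1));
    T checksum = 0;

    const auto start = std::chrono::steady_clock::now();
    for (int r = 0; r < repeats; ++r) {
        T sum = 0;
        for (std::size_t i = 0; i < n; ++i)
            sum += data[i] * static_cast<T>(0.5);
        checksum += sum;
    }
    const auto stop = std::chrono::steady_clock::now();

    return {checksum, std::chrono::duration<double>(stop - start).count()};
}
```

Calling `benchScaleSum<float>(n, repeats)` and `benchScaleSum<double>(n, repeats)` with a small `n` (fits in cache) and a large `n` (does not) shows whether memory bandwidth or arithmetic dominates on a given machine.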

04-30-2024 07:07 PM

@Yamaeda wrote:

How does it fare if it's all Singles instead of Doubles?

Probably most floating point math processors always use the widest format they have*; smaller formats save space, but cost time to convert.

* I know that the Intel x87 series always did calculations in 80-bit format.