Timed delayed message

01-21-2015 02:57 PM

Suggestion: Oversample, include a timestamp with each data point, then at the data collection end, either use XY plotting & analysis or resample to create evenly spaced data. Resampling also provides an opportunity to filter.
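A minimal sketch of the resampling step, written in Python for illustration (the thread's own code is LabVIEW). It assumes the oversampled data arrive as strictly increasing timestamps with matching values, in the same time units as `period`; `resample_linear` is a hypothetical helper name, and linear interpolation is only one possible choice.

```python
from bisect import bisect_right


def resample_linear(times, values, period):
    """Resample irregular (time, value) pairs onto an evenly spaced grid.

    Assumes `times` is strictly increasing. Grid points run from times[0]
    in steps of `period`; each output value is linearly interpolated
    between the two raw samples that bracket the grid point.
    """
    if len(times) != len(values) or len(times) < 2:
        raise ValueError("need at least two (time, value) pairs of equal length")
    # Whole periods that fit in the recorded span; the small epsilon guards
    # against float rounding turning e.g. 4.0 periods into 3.9999999.
    n_points = int((times[-1] - times[0]) / period + 1e-9) + 1
    grid, resampled = [], []
    for k in range(n_points):
        t = times[0] + k * period
        # Raw segment [times[i-1], times[i]] containing t, clamped so the
        # first and last grid points still map to a valid segment.
        i = min(max(bisect_right(times, t), 1), len(times) - 1)
        t0, t1 = times[i - 1], times[i]
        frac = (t - t0) / (t1 - t0)
        grid.append(t)
        resampled.append(values[i - 1] + frac * (values[i] - values[i - 1]))
    return grid, resampled
```

With NumPy available, `numpy.interp(grid, times, values)` performs the same linear interpolation in one call; a smoothing filter could be applied to the raw samples before this step.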

01-21-2015 03:27 PM

powell: How does message sending have anything to do with regularly spaced data? There's no data payload in these messages except whatever fixed payload was put into the original copy. The spacing of data will be based on time received (assuming the heartbeat is a "please send more data"), and we have already established that there will be a fair amount of drift on the receiving end.

01-21-2015 03:50 PM

Hey AQ,

I am planning on sending a more thoughtful response, but I thought I might go ahead and reply to this.

James and I seem to be on the same wavelength (somehow), but I think that you might be in a different space. He hit the explanation dead on - we don't care about the jitter that is introduced in something that might provide a low frequency, long term product (like data from some type of controller). All we might want is to ensure that the product is freshened at some point during the predefined update interval (feel free to use the term "freshened product" in your next presentation, just make sure I get the proper acknowledgement...) and that the bounds of the interval do not drift. These bounds would be defined by the regular message that is sent at the time of sending, regardless of reception or processing.

It is true that you could imagine a scenario where something hangs up and processing is delayed such that two messages are processed in a single interval (with one being skipped). But the message sending will keep plugging away, so you are likely (unless you don't understand the computational requirements) to get back in sync with the intended period at some point. With the current method, there is no well-defined relationship between the sending of successive messages, so you will never be tied to any period, regardless of intention.

RM - as I said before, oversampling might not be necessary or even desirable. Consider something that you are communicating with that provides filtered output: oversampling gains you nothing in this instance, but you can take a significant resource hit if you are polling that device more often than necessary.

01-21-2015 05:03 PM

Hi AQ, are we using the same definition of "drift"? Syncing your messages to the millisecond timer means there is no drift. The time to receive and process your message introduces a delay (and jitter in that delay), but this does not accumulate into drift. This is why “Wait Until Next ms Multiple” exists in LabVIEW.
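To make the difference between delay and drift concrete, here is a small Python simulation that does timing arithmetic only (no real waiting). The fixed 3 ms of work per iteration is an illustrative assumption, not a measurement from this thread. `relative_schedule` models "wait a full period after finishing the work"; `multiple_schedule` models waking on the next multiple of the period, in the spirit of Wait Until Next ms Multiple. Both names are hypothetical, and neither models the Actor Framework VI's internals.

```python
import math


def relative_schedule(period_ms, work_ms):
    """Send times if each wait starts only after the previous iteration's work.

    Models "send, do the work, then wait a full period": every iteration's
    overhead is added to all later send times, so it accumulates as drift.
    """
    t, times = 0.0, []
    for w in work_ms:
        times.append(t)
        t += w + period_ms
    return times


def multiple_schedule(period_ms, work_ms):
    """Send times if each wait ends on the next multiple of period_ms.

    The overhead only eats into the current period; the tick grid itself
    never moves. If the work overruns a whole period, that tick is skipped.
    """
    t, times = 0.0, []
    for w in work_ms:
        times.append(t)
        busy_until = t + w
        # First grid point strictly after the work finishes.
        t = (math.floor(busy_until / period_ms) + 1) * period_ms
    return times
```

With 3 ms of overhead and a 100 ms period, the relative schedule sends its tenth message at 927 ms instead of 900 ms, and the gap keeps growing. The multiple-of-period schedule stays on the 100 ms grid; when one iteration overruns, it skips a tick rather than shifting every later tick.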

01-23-2015 01:04 AM

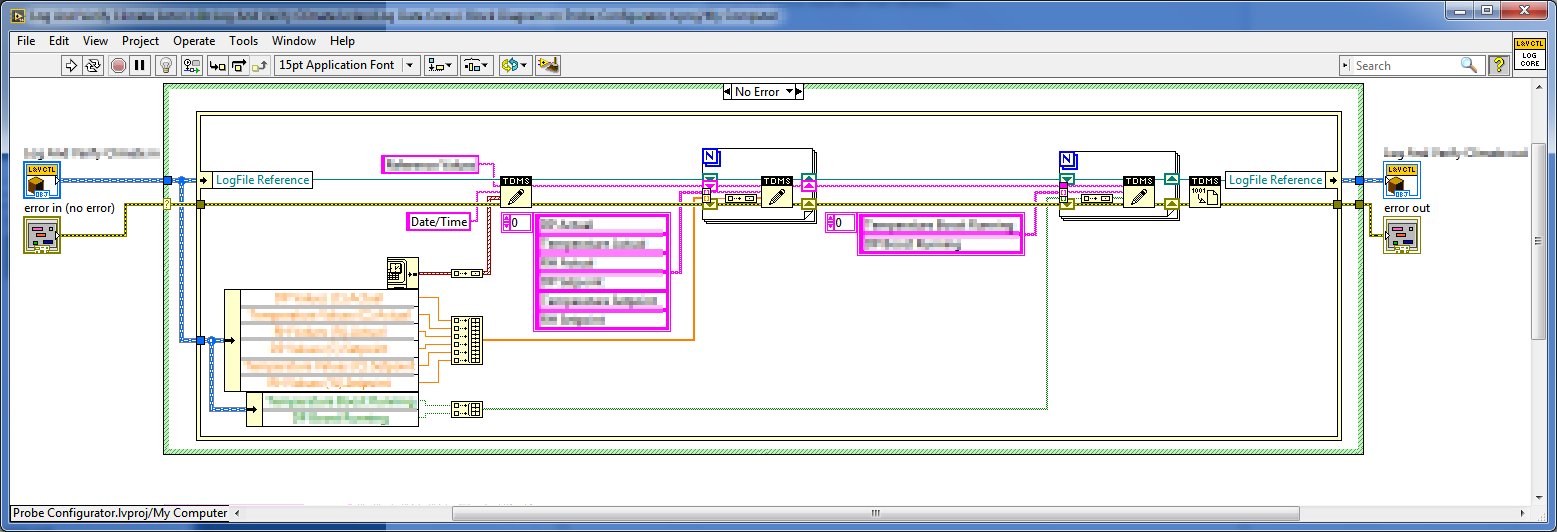

Well, while this discussion was going on, I had an AF project running that logged the values last night.

I removed all the data except for the timestamp.

The data:

https://drive.google.com/file/d/0B-2EkjWE5Pr9SEI2MXJWMHhaQ3M/view?usp=sharing

The code (LabVIEW 2014.0f1, uncompiled):

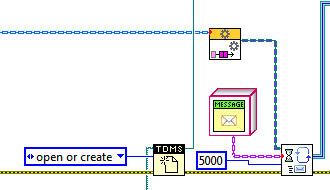

Init:

Logging code:

From what I can tell there is a (little) drift but I don't have time to process the data more.
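One way to quantify the drift in a log like this is to fit a straight line to timestamp versus message index: the slope is the mean period actually achieved, and its difference from the nominal period is the drift added per interval. Random jitter tends to average out of the slope, while a constant per-iteration overhead does not. A Python sketch, assuming the timestamps have already been loaded as seconds (the file's layout isn't described here, so parsing is left out); `estimate_drift` is a hypothetical name.

```python
def estimate_drift(timestamps, nominal_period):
    """Return (mean_period, drift_per_interval) from logged send times.

    Fits t_k ~ a + b*k by ordinary least squares over the message index k;
    b is the mean period achieved, and b - nominal_period is the drift
    accumulated per interval.
    """
    n = len(timestamps)
    if n < 2:
        raise ValueError("need at least two timestamps")
    k_mean = (n - 1) / 2
    t_mean = sum(timestamps) / n
    num = sum((k - k_mean) * (t - t_mean) for k, t in enumerate(timestamps))
    den = sum((k - k_mean) ** 2 for k in range(n))
    slope = num / den
    return slope, slope - nominal_period
```

Multiplying the per-interval drift by the number of intervals logged gives the total drift over the run.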

Hope it helps in the discussion.

01-23-2015 10:16 AM

Powell: I'll ask you the same question I asked mtat76: So what if there is drift? Can you name me any application where the receiver is non-deterministic but drift matters? If so, in that application, what is the negative of a missed message in any given interval? I cannot posit any application in which BOTH non-deterministic communication exists AND a few milliseconds worth of drift accumulating over intervals matters to the application's correctness.

To put it another way: in every actual use of this function I've seen, the time period has been wired with a hand-wavy number. "Hm... I want to update this oh.... every, let's say, 500 ms. Yeah. Let's go with that." It is a rough estimate, and if it is roughly right, that's more than correct behavior. There just isn't a use case for using it with precision that I know of; making it non-drifty doesn't seem to buy anything.

Where could you use a non-drifty version and actually get benefit?

01-23-2015 10:18 AM

Hey Tim,

Thanks for putting in the effort on this. One thing I notice is that the delay is set to 5 s. What counts as "little" will often be relative to the period of interest. Remember that the drift will likely always be the same, given that the message-sending code is always the same. So if the drift is 5 ms, this might not seem like a lot for a period of 5 s, but for shorter periods it could be quite significant.

And anyway, drift is drift, and it will continue to accumulate regardless of how large it is. The question is whether that is desirable, or even expected.
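The accumulation can be written down under a simplifying assumption: each iteration adds a constant overhead $\delta$ on top of the nominal period $T$ (real overhead also has a random part, which this ignores). After $n$ intervals:

$$
t_n = n\,(T + \delta), \qquad D_n = t_n - nT = n\,\delta, \qquad \frac{D_n}{nT} = \frac{\delta}{T}.
$$

With $\delta = 5$ ms, a 5 s period is off by $0.1\%$ (3.6 s after the 720 intervals of one nominal hour), while a 100 ms period is off by $5\%$ (180 s after 36,000 intervals).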

AQ, I have been putting some thought into this and will have more profound comments a little later (actual work has distracted me from this engaging discussion).

Cheers, Matt

01-25-2015 04:47 PM

Hi AQ, I see what you're saying. It can’t really matter that much when the message is sent, because you can’t ensure when it is handled. But there is (minor) benefit to taking data where you know there is no drift over time (even if individual readings have jitter), and making a component that doesn’t have drift is not complicated. There is also the principle of least astonishment: a periodic message sender should be periodic.

01-25-2015 11:51 PM

Arrrrggggghhhh! This statement

AristosQueue wrote:

I cannot posit any application in which BOTH non-deterministic communication exists AND a few milliseconds worth of drift accumulating over intervals matters to the application's correctness.

is aggravating! There seems to be a real disconnect between this statement and my statements above. I don't know how to make this any clearer. Let's just go ahead and use a Windows application as an example: this will NEVER be deterministic. Does that mean that you don't want things to occur at regular intervals (i.e. no accumulating drift)? Absolutely not, but you have to understand that those updates come with jitter.

I think the statement in the above comment hits at something that seems to be missing in the discussion:

drjdpowell wrote:

But there is (minor) benefit to taking data where you know there is no drift over time (even if individual readings have jitter)

The word that seems to be missing is data. We talk about "messages" and "functions" above as if they are the sole object of a LabVIEW application. But the reality is that many of us (most?) use LabVIEW to acquire some kind of signal (i.e. data). And the actual acquisition of that signal often comes with some indeterminate period that is defined by a whole chain of events that may not be easily defined (code execution is just one component).

In the case in which I was intending to use this, I have an actor that is a wrapper for different devices. The actor may be part of an RT system or a Windows-based system, but regardless of the platform, many instances of this actor are launched to wrap up functionality of these different devices. Now, each of these devices produces a data product that is used by the rest of the system. And each of these devices may accept input that might affect the behavior of the acquisition of that data. The system expects data from these devices to establish the physical conditions of the system at regular intervals (1 Hz is usually sufficient); stale data might result in incorrect in situ data processing or bad decisions made regarding the control of the overall system state.

Now, some of these devices are serial, and communication with them will never be "deterministic" in the traditional sense: maybe sometimes it takes 25 ms to retrieve the data product, maybe sometimes 27; maybe there is another device talking on the line and it will have to wait 50 ms, I don't know. Regardless, none of these problems results in accumulating drift the way the time-delayed send message VI does. That is because each time a new message is sent, all of the actions taken to retrieve the data get a fresh start. If the start line consistently moves, then we now have a non-random component that we have to account for in the way we set the message up. But regardless of how we set the message up, the current implementation already ensures that we will never get regular behavior: there will always be drift, so the start line is guaranteed to move.

Is the use of the actor in this case correct? I guess that is open for debate, but surely there are similar instances where you wish to have a regular message delivered and drift does matter. And as the above comment goes

drjdpowell wrote:

a periodic message sender should be periodic.

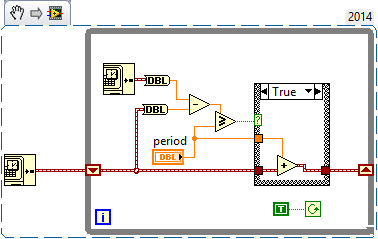

The following loop took me less than 2 minutes to code and should be stable for...ever?

With a little more effort, we can even ensure that this will be more reliable for smaller time scales. So why does it seem like the current way of doing it is worthy of so much defense when there is a way that is easy to implement and straightforward to achieve periodic behavior?

I think we can call this complete: the VI was coded this way because there was no foreseen need to ensure periodic behavior, and the wait on the notifier was the fastest way to code it (the answer to the original question). I hope that the discussion convinces everyone of two things: that some might want messages sent with guaranteed periodicity, and that it really isn't that difficult to do...

Thanks for the discussion AQ. This has been insightful.

01-26-2015 03:58 AM

mtat76 wrote:

The following loop took me less than 2 minutes to code and should be stable for...ever?

I haven't read the thread too closely, but I can see one big difference between this and the current implementation - this is polling and the current VI isn't, which I'm assuming was the main consideration behind the current design.

If you make it non-polling, you have to account for the drift in code somehow if you really want the data to be sent at regular intervals, as there is currently no non-polling mechanism (at least none that I can think of) which can be configured to act at regular intervals.
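A sketch of one way to account for the drift in code without polling, in Python for illustration. Here `threading.Event.wait(timeout)` stands in for a blocking wait with a timeout (roughly the role a notifier wait plays in LabVIEW). The thread blocks rather than polls, and each timeout is recomputed from an absolute deadline `start + k * period_s` instead of waiting a full period after each send. `run_periodic` and its parameters are hypothetical; this illustrates the idea and is not how the Actor Framework VI is implemented.

```python
import threading
import time


def run_periodic(period_s, send, stop_event, clock=time.monotonic):
    """Call send(k) at start + k*period_s until stop_event is set.

    Wake-up latency shows up as jitter on individual sends, but it is never
    carried into the next deadline, so it does not accumulate as drift.
    """
    start = clock()
    k = 0
    while True:
        deadline = start + k * period_s  # absolute, not "last send + period"
        # Block (not poll) until the deadline; re-check in case wait()
        # returns slightly early, and exit at once if a stop is requested.
        while True:
            remaining = deadline - clock()
            if remaining <= 0:
                break
            if stop_event.wait(remaining):
                return
        if stop_event.is_set():
            return
        send(k)
        # Next tick is k + 1, or later if whole periods were missed while busy.
        k = max(k + 1, int((clock() - start) / period_s) + 1)
```

Calling `stop_event.set()` from another thread ends the loop without waiting out the current period. Ticks that are missed entirely (for example, if the thread is descheduled for longer than a period) are skipped rather than sent back to back, which fits the "freshen once per interval" goal described earlier in the thread.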

___________________

Try to take over the world!