Interpolate Pixel

04-18-2024 01:06 PM

@Andrey_Dmitriev wrote:

@paul_a_cardinale wrote:

To be extra fancy, it should take into account gamma (that would probably slow it down to a crawl).

Interesting, what exactly do you mean? I don't have much experience with color images, so my idea could be wrong, but perhaps 2D interpolation in a color space other than RGB, such as HSL, would help?

In the human visual system, perceived brightness is approximately proportional to the cube root of light intensity. In order to make the best use of the available dynamic range, digital cameras use a non-linear transfer function. Probably each brand has its own proprietary function, but they should all roughly approximate the human visual system. Thus, with an 8-bit value, 127 should represent a light level that looks half as bright as the light level represented by 255, which is to say about 1/8 of the light at 255. Many image editing programs (even expensive ones) don't take this into account, with the effect that downscaling an image causes it to darken. For example, the average of black (0) and white (255) should be 202, not 127.
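As a worked check of those numbers, assuming the pure cube-root model described in this post (real transfer functions such as sRGB differ, so the exact values are illustrative only), let $v$ be the 8-bit code value and $L$ the relative light level:

$$
L = \left(\frac{v}{255}\right)^{3}, \qquad v = 255\,L^{1/3}
$$

$$
L(127) = \left(\frac{127}{255}\right)^{3} \approx 0.124 \approx \tfrac{1}{8}
$$

$$
L_{\text{avg}} = \frac{L(0) + L(255)}{2} = 0.5 \quad\Rightarrow\quad v = 255 \cdot 0.5^{1/3} \approx 202
$$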

04-18-2024 01:22 PM

I always thought that the display devices take care of gamma correction, but this is definitely not my field of expertise. 😄

(Most monitors have a gamma adjustment available. Same for Windows itself.)

04-18-2024 01:49 PM

@altenbach wrote:

I always thought that the display devices take care of gamma correction, but this is definitely not my field of expertise. 😄

(Most monitors have a gamma adjustment available. Same for Windows itself.)

Yes, they do. So a display will present a value of 127 with about 1/8 of the light level of a value of 255.

The problem is that when you blend pixels, you want to blend light levels. But the numeric values are not proportional to the light levels. So just blending the numbers gives inaccurate results.

04-18-2024 01:49 PM - edited 04-18-2024 01:51 PM

@paul_a_cardinale wrote:

In the human visual system, perceived brightness is approximately proportional to the cube root of light intensity. In order to make the best use of the available dynamic range, digital cameras use a non-linear transfer function.

Thank you very much! In general, we use medical grayscale monitors for viewing industrial x-ray images in DICOM mode, and these monitors should conform to the Grayscale Standard Display Function, which dictates the luminance (measured in cd/m²) that shall be emitted at each Digital Driving Level on the monitor. They are pre-adjusted and perform self-calibration from time to time. I never really thought about it before, but with your simple explanation I understand this characteristic curve much better. An interesting fact is that on these monitors the human eye can easily distinguish even a single gray-level step (such as between 200 and 201, when the areas are large enough or on smooth gradients). We can therefore display the image at 10 bits, and the banding effect disappears, because 1024 gray levels (or 1024 levels per color channel on a color monitor) are available on the screen simultaneously.

04-18-2024 04:51 PM

@altenbach wrote:

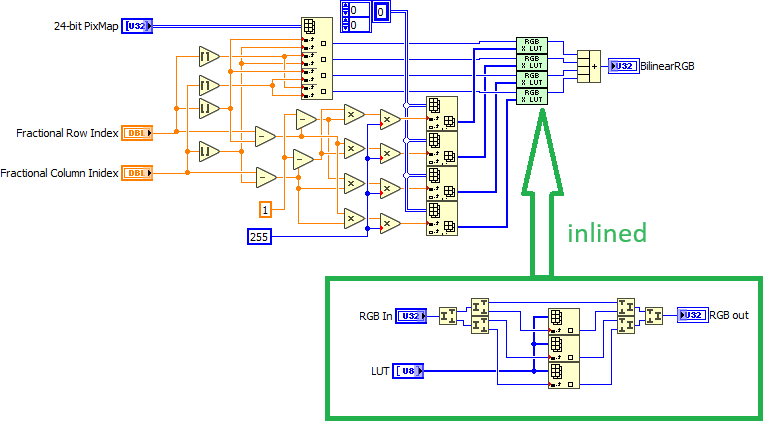

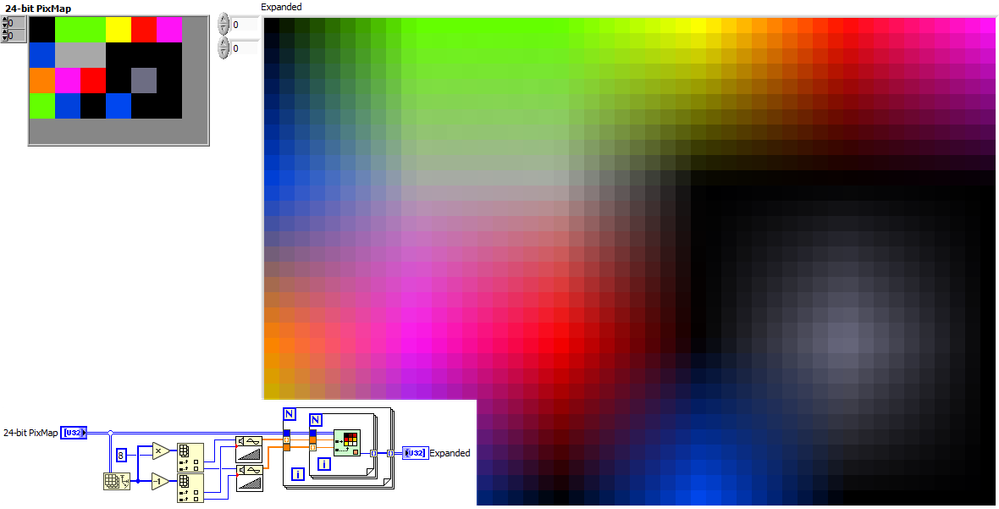

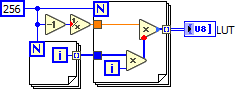

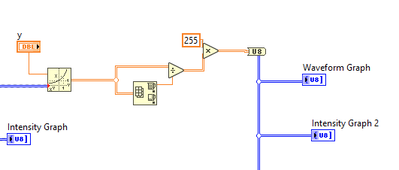

Replacing the detour via DBL multiplication with a LUT approach gives me about a 3-7x speedup over the original code.

(Since the LUT is quantized to U8 values, it does not really need to be very big)

I'll attach some code later.

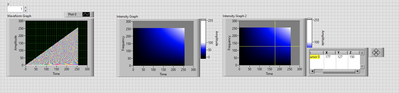

For example, the following 8x expansion takes about 100 microseconds on my laptop (same result as yours). I am sure there is some slack left:

The LUT constant is created as follows:

It took me a while to realize why the final compound add works with a packed triplet of bytes.

In the code to create the LUT, how did you get the multiply function to output U8s?
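The remark about the compound add on a packed triplet of bytes can be illustrated with a small sketch. The actual LabVIEW diagram is only available as images in the thread, so everything here is an assumption: the names `STEPS`, `LUT`, `pack`, `unpack`, `scale` and `blend` are hypothetical, the weights are taken to be multiples of 1/8, and the table is built with truncation rather than whatever rounding the original uses. The point is only that if the per-channel contributions can never sum to more than 255, no carry crosses a byte boundary, so adding the packed values is the same as adding each channel separately.

```python
STEPS = 8  # assumption: interpolation weights are multiples of 1/8

# LUT[k][v] = floor(v * k / STEPS); every entry fits in an unsigned byte.
LUT = [[(v * k) // STEPS for v in range(256)] for k in range(STEPS + 1)]


def pack(r, g, b):
    """Pack three 8-bit channels into one integer (0xRRGGBB)."""
    return (r << 16) | (g << 8) | b


def unpack(p):
    """Split a packed 0xRRGGBB integer back into (r, g, b)."""
    return (p >> 16) & 0xFF, (p >> 8) & 0xFF, p & 0xFF


def scale(p, k):
    """Weight each channel of a packed pixel by k/STEPS via the table."""
    r, g, b = unpack(p)
    return pack(LUT[k][r], LUT[k][g], LUT[k][b])


def blend(p0, p1, k):
    """Blend two packed pixels with weights (STEPS - k)/STEPS and k/STEPS.

    Per channel, floor(a*(STEPS-k)/STEPS) + floor(b*k/STEPS) <= 255,
    so the plain integer add never carries into the neighbouring byte.
    """
    return scale(p0, STEPS - k) + scale(p1, k)


print(unpack(blend(pack(255, 0, 16), pack(0, 255, 16), 4)))
```

Truncation is chosen here because rounding to nearest could let two half-weight contributions of 255 sum to 256 and carry into the next channel.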

04-18-2024 05:39 PM

@paul_a_cardinale wrote:

In the code to create the LUT, how did you get the multiply function to output U8s?

Right click the node, Properties, then Output Configuration.

04-19-2024 08:03 AM - edited 04-19-2024 08:05 AM

What I like about the LUT approach in Message 16 is that parallelization can apparently be turned on for the two nested For Loops, and it appears to be significantly faster than without.

@altenbach wrote:

@paul_a_cardinale wrote:

To be extra fancy, it should take into account gamma (that would probably slow it down to a crawl).

In my code, all you probably need is a nonlinear LUT to take gamma into account, but I have not tried that.

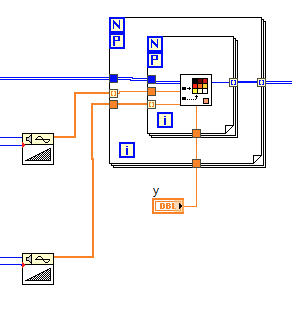

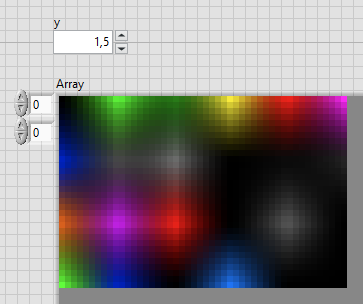

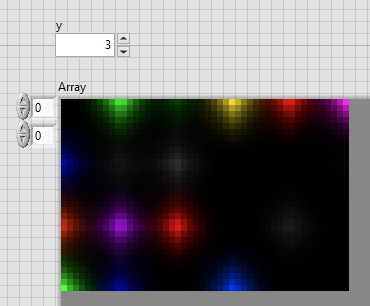

- gamma correction is often just an implementation of Output = Input**(x), where ** means "to the power of"

- we use this to brighten or darken images, so a user can better interpret an image

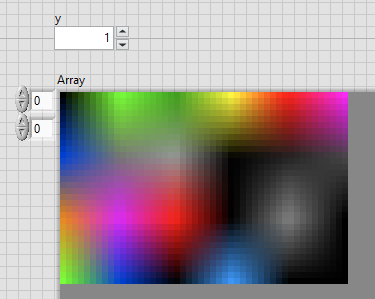

- So, in a very naive way, I would just put in the following, with y limited to the range 1 to +Inf in order to "darken" the image

- values between 0 and 1 would "brighten" the image, but you have to take some extra care of maximum value overflowing, and I can't remember at the moment, how I have done this before

attached as LabVIEW 2020
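The power-law mapping from the bullets above can be sketched as a 256-entry table. This is not the attached VI, only a minimal illustration under stated assumptions: the function name `gamma_lut` is hypothetical, and the overflow concern is handled by normalizing the input to 0..1 before raising it to the power `y`, which may or may not be what the original did.

```python
def gamma_lut(y):
    """Build a 256-entry table for Output = Input ** y on 8-bit values.

    The input is normalized to 0..1 first, so the result stays in 0..1
    for any positive exponent; y > 1 darkens and 0 < y < 1 brightens.
    The final clamp is only a safeguard against rounding surprises.
    """
    if y <= 0:
        raise ValueError("exponent must be positive")
    return [min(255, max(0, round(255 * (v / 255) ** y))) for v in range(256)]


darken = gamma_lut(2.0)
brighten = gamma_lut(0.5)
print(darken[128], brighten[64])
```

Applying the table is then a single lookup per channel value, for example `darken[pixel_value]`.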

04-19-2024 08:30 AM

@altenbach wrote:

@paul_a_cardinale wrote:

To be extra fancy, it should take into account gamma (that would probably slow it down to a crawl).

In my code, all you probably need is a nonlinear LUT to take gamma into account, but I have not tried that.

That was my initial thought too. But it seems to be more complicated than that. First the gamma has to be backed out, then the weighted average taken, then the gamma reapplied. I think that would take 3 LUTs, and my guess is that they would have to be wider than 8 bits in order to prevent quantization artifacts.
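The decode, average, re-encode sequence described here can be sketched as follows. This is a hedged illustration, not a proposal for the LabVIEW code: it assumes the cube-root model from earlier in the thread (`GAMMA = 3.0`; real image data more commonly follows sRGB or a power of roughly 2.2), it uses one floating-point decode table plus a direct power function instead of three integer LUTs, and the names `DECODE`, `encode` and `interpolate` are hypothetical.

```python
GAMMA = 3.0  # assumption: cube-root model from the thread, not sRGB

# Decode table: 8-bit code value -> relative light level in 0..1.
# Floats sidestep the table-width question raised above.
DECODE = [(v / 255) ** GAMMA for v in range(256)]


def encode(light):
    """Relative light level in 0..1 -> 8-bit code value."""
    return min(255, max(0, round(255 * light ** (1 / GAMMA))))


def interpolate(a, b, t):
    """Blend code values a and b in linear light; t is the weight of b."""
    light = (1 - t) * DECODE[a] + t * DECODE[b]
    return encode(light)


naive = (0 + 255) // 2            # blending the code values directly
aware = interpolate(0, 255, 0.5)  # blending the light levels
print(naive, aware)
```

On the width question: under this cube-root model a 16-bit integer decode table would round codes 1 through 5 all to 0, which is consistent with the guess that 8-bit tables are not enough, and suggests that even 16 bits loses detail at the dark end.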

04-19-2024 08:40 AM

@alexderjuengere wrote:

What I like about the LUT approach in Message 16 is that parallelization can apparently be turned on for the two nested For Loops, and it appears to be significantly faster than without.

@altenbach wrote:

@paul_a_cardinale wrote:

To be extra fancy, it should take into account gamma (that would probably slow it down to a crawl).

In my code, all you probably need is a nonlinear LUT to take gamma into account, but I have not tried that.

- gamma correction is often just an implementation of Output = Input**(x), where ** means "to the power of"

- we use this to brighten or darken images, so a user can better interpret an image

- So, in a very naive way, I would just put in the following, with y limited to the range 1 to +Inf in order to "darken" the image

- values between 0 and 1 would "brighten" the image, but you have to take some extra care of maximum value overflowing, and I can't remember at the moment, how I have done this before

attached as LabVIEW 2020

But we're not talking about applying a gamma.

I want to interpolate pixels. But simply interpolating the tristimulus values is not accurate because those values are not directly proportional to the light levels. The gamma must be backed out, the interpolation performed, then the gamma reapplied; and this must be done for each pixel.

04-19-2024 09:27 AM - edited 04-19-2024 09:33 AM

@alexderjuengere wrote:

What I like about the LUT approach in Message 16 is that parallelization can apparently be turned on for the two nested For Loops, and it appears to be significantly faster than without.

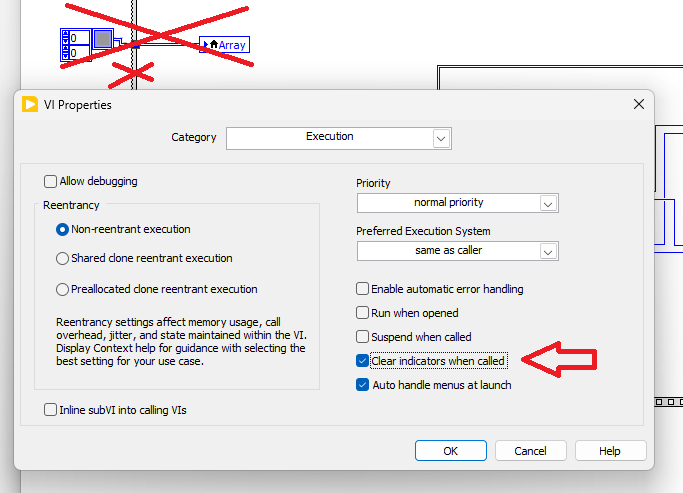

I would strongly recommend parallelizing only the outer FOR loop. Once every core works on one of the inner loop stacks, there is nothing left to further parallelize.

The parallelization overhead is smallest on the outermost loop, but we still need to carefully test everything to make sure it is really faster. For example, parallelization creates many copies of the LUT, which puts more pressure on the CPU cache.

(For example, during testing, I had a version that did the three colors in a parallel FOR loop and it was an order of magnitude slower than the unrolled version.)

As a further comment, you can eliminate the local variable and sequence frame in the caller by just enabling "clear indicators when called".