Interpolate Pixel

04-24-2024 03:21 AM

@altenbach wrote: Nitpicking: To remove some diagram clutter, all we need is a single "1" diagram constant, and the "3" can be left unwired. 😄

I also doubt that the "delete from array" adds any value, because the deleted element is probably zero.

Oh, I know about this, but sometimes I leave these constants wired (this time the code was quickly copy-pasted just to prove the improvement). In some cases, unwired inputs on array operations can be a kind of indirect "obfuscation", and in this special case, the "3" indicated (for a stupid code reviewer) that the next loop will iterate 3 times.

Anyway, this topic would be incomplete without one more DLL-based attempt. So, I'll take the "Maybe now I'm done" version and implement it 1:1 in C code:

#include "framework.h"
#include "IP2D.h"

#define RGB_STEPS 256
#define WEIGHT_STEPS 1024

INT64 LUT[RGB_STEPS]; // Not thread-safe
INT64 WLUT[RGB_STEPS * WEIGHT_STEPS];
static double Gamma = NAN;

// Build the gamma table (LUT) and the weight-times-gamma table (WLUT)
IP2D_API void fnLUT(double gamma)
{
    for (int i = 0; i < RGB_STEPS; i++) {
        LUT[i] = (INT64)rint(pow((double)i, gamma));
        for (int j = 0; j < WEIGHT_STEPS; j++) {
            WLUT[j + i * WEIGHT_STEPS] = j * LUT[i];
        }
        LUT[i] *= WEIGHT_STEPS - 1;
    }
}

// Address of the pixel at (row_y, col_x), viewed as bytes
inline BYTE* getpXY(UINT* image, int cols_width, double row_y, double col_x) {
    return (BYTE*)&(image[((int)row_y * cols_width) + (int)col_x]);
}

// Index of the first LUT entry greater than Test, clamped to 255
inline UINT binarySearch(INT64 LUT[], INT64 Test)
{
    UINT left = 0, right = 255;
    while (left <= right) {
        UINT mid = left + (right - left) / 2;
        if (LUT[mid] <= Test) left = mid + 1;
        else right = mid - 1;
    }
    return (256 == left) ? left - 1 : left; // if too much, step back
}

// This is an example of a 2D interpolation with gamma.
IP2D_API UINT fnIP2D(iTDHdl arr, double fCol, double fRow, double gamma)
{
    uint32_t* pix = (*arr)->elt;    // address of the pixels
    int cols = (*arr)->dimSizes[1]; // number of columns (image width)

    // Recompute the LUTs if gamma changed (like a shift register in LV)
    if (gamma != Gamma) { fnLUT(gamma); Gamma = gamma; }

    // Relative weights
    double ffRow = floor(fRow);
    double ffCol = floor(fCol);
    double ffRowDiff = fRow - ffRow;
    double ffColDiff = fCol - ffCol;
    UINT relW0 = (UINT)rint((1 - ffRowDiff) * (1 - ffColDiff) * (WEIGHT_STEPS - 1));
    UINT relW1 = (UINT)rint((ffRowDiff) * (1 - ffColDiff) * (WEIGHT_STEPS - 1));
    UINT relW2 = (UINT)rint((1 - ffRowDiff) * (ffColDiff) * (WEIGHT_STEPS - 1));
    UINT relW3 = (UINT)rint((ffRowDiff) * (ffColDiff) * (WEIGHT_STEPS - 1));

    // Four pixels:
    BYTE* p0 = getpXY(pix, cols, rint(ffRow), rint(ffCol));
    BYTE* p1 = getpXY(pix, cols, rint(ffRow + 1), rint(ffCol));
    BYTE* p2 = getpXY(pix, cols, rint(ffRow), rint(ffCol + 1));
    BYTE* p3 = getpXY(pix, cols, rint(ffRow + 1), rint(ffCol + 1));

    // Target color:
    BYTE bgr[3] = { 0, 0, 0 };
    //#pragma omp parallel for num_threads(3) //<-slow down around twice
    for (int i = 0; i < 3; i++) {
        double res = 0.0;
        INT64 sum = WLUT[p0[i] * WEIGHT_STEPS + relW0] +
                    WLUT[p1[i] * WEIGHT_STEPS + relW1] +
                    WLUT[p2[i] * WEIGHT_STEPS + relW2] +
                    WLUT[p3[i] * WEIGHT_STEPS + relW3];
        UINT pos = binarySearch(LUT, sum); // Threshold 1D Array analog
        if (pos--) res = pos + (double)(sum - LUT[pos]) / (LUT[pos + 1] - LUT[pos]);
        bgr[i] = (int)rint(res); // fractional index
    } // RGB loop
    return (bgr[0] | (bgr[1] << 8) | bgr[2] << 16);
}

// Same as above with Rows/Cols cycles
IP2D_API void fnIP2D2(iTDHdl arrSrc, iTDHdl arrDst, fTDHdl fCol, fTDHdl fRow, double gamma)
{
    int Ncols = (*fCol)->dimSizes[0];
    int Nrows = (*fRow)->dimSizes[0];
    double* fColPtr = (*fCol)->elt;
    double* fRowPtr = (*fRow)->elt;
    unsigned int* DstPtr = (*arrDst)->elt;

    // Build the LUTs once up front so the parallel loop below never rewrites them
    if (gamma != Gamma) { fnLUT(gamma); Gamma = gamma; }

#pragma omp parallel for // num_threads(4)
    for (int y = 0; y < Nrows; y++) {
        for (int x = 0; x < Ncols; x++) {
            DstPtr[x + y * Ncols] = fnIP2D(arrSrc, fColPtr[x], fRowPtr[y], gamma);
        }
    }
}

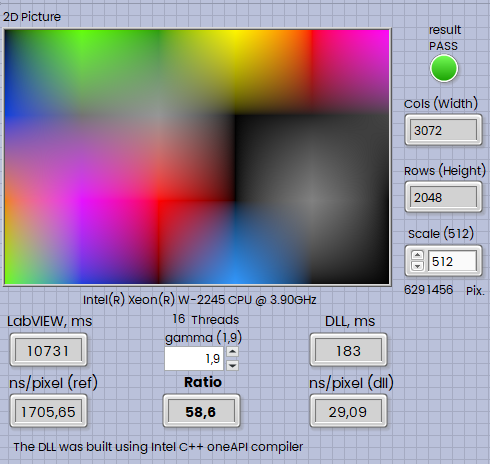

And 'More lookup, less multiply' does the job. When compiled with the Intel oneAPI C++ compiler, it is more than fifty times faster than the pure LabVIEW code:

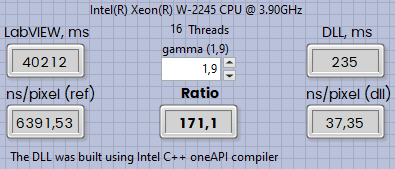

The screenshot above is from LabVIEW 2024 Q1. With the old LabVIEW 2018 32-bit, the performance boost is much more significant.

The source code is attached. There are two benchmarks inside: one for the DLL compiled with the Intel oneAPI C++ compiler and another for the Microsoft C++ compiler. If you don't want to install the Intel runtime, you can play with the MSVC build (which is around 20 times faster than the pure LabVIEW code). I double-checked that debugging is disabled, the code is inlined, etc.

04-24-2024 07:25 PM - edited 04-24-2024 07:55 PM

Well, I couldn't leave well-enough alone.

I wanted to have it use a variant of altenbach's much faster method in cases when the gamma is set to 1.

But something strange is happening. When the gamma is set to 1, this default case doesn't execute (which is correct).

But the mere presence of code in that (unexecuted) case slows it down a lot. If I delete the code in that case, it runs much faster.

Why is it that the presence of code in an unexecuted case slows down the vi?

04-24-2024 11:41 PM

@paul_a_cardinale wrote:

Well, I couldn't leave well-enough alone.

I wanted to have it use a variant of altenbach's much faster method in cases when the gamma is set to 1.

But something strange is happening. When the gamma is set to 1, this default case doesn't execute (which is correct).

But the mere presence of code in that (unexecuted) case slows it down a lot. If I delete the code in that case, it runs much faster.

Why is it that the presence of code in an unexecuted case slows down the vi?

Well, only NI can tell you "why". LabVIEW is an optimizing compiler, which means that optimization steps such as constant folding and unused code removal are applied internally to get "optimal" code. The code is also split into chunks that can be executed in parallel, then threads are created, and so on. After that, LLVM comes into play, and in the end the overall execution is not what the block diagram shows. In this particular case, some unnecessary code is definitely executed in the background. The question is where exactly the compiler placed the "branch" that switches between the two execution paths.

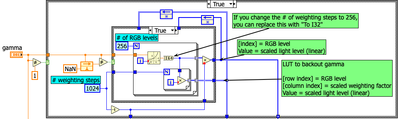

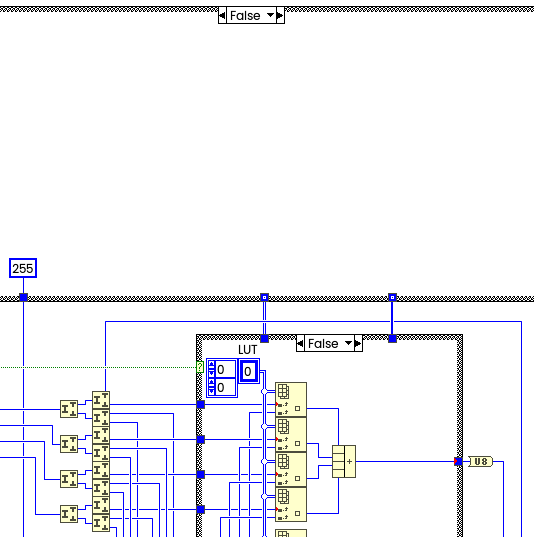

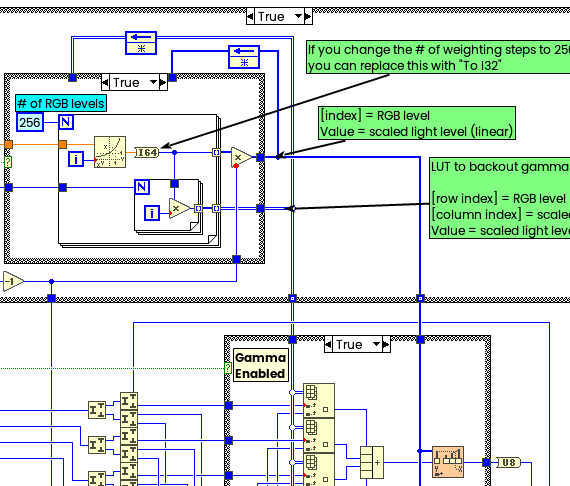

Anyway, after some experiments, I've found that removing this wire is a game-changer: once it's removed, the code runs as fast as it does without the case structure.

The shift register is probably causing the problem.

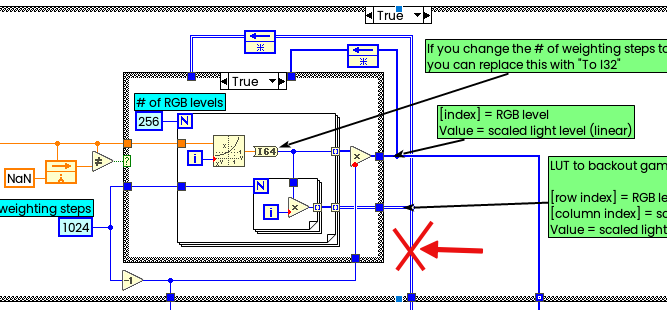

Then, obviously, you can modify your code to run without gamma as shown. This is your "false" case, with the constant moved from the upper case to the lower one and the output left unwired:

And the true case:

Then it works as expected.

Interesting case, good to know, thanks for catching this "issue".

One thing I don't understand: why are you struggling and fighting with slow LabVIEW code at the limits of optimization instead of switching to a DLL-based solution? Also, if you don't have Visual Studio Professional and don't want to depend on the Intel compiler, almost any C compiler may give you much better performance (especially in the "with gamma" case). Again, the LabVIEW compiler is not as optimal as MSVC or Intel for many reasons, and it's not as good at SIMD or vectorization; just accept this fact. In some cases it may be faster, but not in your case. If you look inside most of the math SubVIs (Mean, Median, etc.), you will see DLL calls to lvanlys.dll rather than native pure LabVIEW code.

A long time ago, I disassembled compiled LabVIEW code (it was LabVIEW 6.1, and it was relatively simple) and checked at the assembler level which machine instructions were behind the For Loops and Case Structures. I noticed a lot of "overhead": where a C compiler outputs just 10-15 machine instructions, LabVIEW may produce 30-40 for the same code. For example, if you access an array out of bounds in C, the behavior is undefined and you may get a hard exception, but if you call the Index Array function in LabVIEW with an index outside the array's range, nothing happens: no crash, no exception. This bounds checking is not "for free"; it slows down your code. And so on.

Maybe I'll repeat the disassembling exercise with LabVIEW 2024, but it's harder because the code is "packed" nowadays. It's not as easy to extract as before, but impossible is nothing.
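To make the bounds-checking point concrete, here is a small C sketch of the two access styles. It only imitates the observable behaviour of Index Array (an out-of-range index yields the default value 0); it is not what LabVIEW generates internally, and the function names are invented for this example.

```c
#include <stddef.h>
#include <stdint.h>

/* Unchecked access: a single load. An out-of-range i is undefined behaviour. */
static int32_t index_unchecked(const int32_t *a, size_t i)
{
    return a[i];
}

/* Checked access in the spirit of Index Array: an out-of-range index
   returns 0 instead of touching memory outside the array. The extra
   compare-and-branch is paid on every single access. */
static int32_t index_checked(const int32_t *a, size_t n, size_t i)
{
    return (i < n) ? a[i] : 0;
}

/* The same reduction written with both access styles. */
int64_t sum_unchecked(const int32_t *a, size_t n)
{
    int64_t s = 0;
    for (size_t i = 0; i < n; i++) s += index_unchecked(a, i);
    return s;
}

int64_t sum_checked(const int32_t *a, size_t n)
{
    int64_t s = 0;
    for (size_t i = 0; i < n; i++) s += index_checked(a, n, i);
    return s;
}
```

In a loop this simple, a good C compiler can usually prove the check redundant and drop it. The cost shows up when the index comes from data, as it does in the interpolation code.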

04-25-2024 09:33 AM

@Andrey_Dmitriev wrote: One thing I don't understand. Why are you struggling and fighting with slow LabVIEW code, on the limits of optimization, and not switching to a DLL-based solution?

You make it sound like another development tool (esp. a compiler) is a picnic.

If you're in a team (esp. a team of LabVIEW developers), adding the need for any additional tool (esp. a compiler) is a nightmare.

There's a balance between execution speed and development speed. If you (and/or your team) are not familiar with other compilers, there's a bias towards LabVIEW.

04-25-2024 09:43 AM

It's interesting and a little shocking that the difference is as big as it is. LV's compiler is pretty good, but apparently not good enough. It's also interesting to see all the little tweaks to the code and see the results.

04-25-2024 09:45 AM

@Andrey_Dmitriev wrote:

One thing I don't understand. Why are you struggling and fighting with slow LabVIEW code, on the limits of optimization, and not switching to a DLL-based solution?

I do most of my imaging work on a Mac.

04-25-2024 10:03 AM

It appears not to be the shift register, but rather the existence of the data. If I replace the code that would generate the arrays with just array constants (for one specific gamma), it still runs slow.

Right now, my best guess is that when the compiler sees the array output tunnels of the case structure, it tries to figure out how big they would be, then creates some code that dynamically allocates that much space.

I can think of 4 possible ways to correct this (but I don't like any of them).

- Polymorphic. Kind of klunky and cumbersome.

- Malleable. The gamma terminal as an integer. When unconnected, it uses the simple code (gamma = 1), when a double is connected, it uses the code to correct for gamma. What I don't like about this is that if you right-click on the gamma terminal of an instance, and select Create Constant, you don't get a double.

- Express VI. The gamma would be specified by the configuration (instead of a conpane terminal). The downside is that due to the quirkiness of Express VIs, I would have to create an installer for it.

- XNode. Such a headache.

04-25-2024 10:16 AM

@paul_a_cardinale wrote:

@Andrey_Dmitriev wrote:

One thing I don't understand. Why are you struggling and fighting with slow LabVIEW code, on the limits of optimization, and not switching to a DLL-based solution?

I do most of my imaging work on a Mac.

Ah, OK, now it's clear why the VS Solution is useless for you.

Well, in theory you can do the same on macOS and call a shared library (there it is called a Framework), which you can build with Xcode (or gcc?). It is a very interesting exercise where I can't help much, because unfortunately I don't have a Mac at hand. I would recommend at least giving it a try. I think Rolf Kalbermatter has more experience in this area and could maybe help.
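As a starting point for such a port, the only Windows-specific part of the C source is likely the export macro. The sketch below is an assumption, not the content of the real IP2D.h (which is not shown in this thread): it defines a hypothetical `IP2D_API` for both platforms and exports one small function, `ip2d_pack_bgr`, invented here to mirror the return statement of fnIP2D.

```c
#include <stdint.h>

/* Hypothetical stand-in for the IP2D_API macro from IP2D.h. */
#if defined(_WIN32)
#  define IP2D_API __declspec(dllexport)
#else
#  define IP2D_API __attribute__((visibility("default")))
#endif

/* Pack three 8-bit channels into one 32-bit pixel, blue in the low byte. */
IP2D_API uint32_t ip2d_pack_bgr(uint8_t b, uint8_t g, uint8_t r)
{
    return (uint32_t)b | ((uint32_t)g << 8) | ((uint32_t)r << 16);
}
```

With clang on macOS, something like `clang -O2 -dynamiclib ip2d.c -o libip2d.dylib` should produce a loadable library. Whether the Call Library Function Node accepts a plain dylib or needs a framework bundle is something I can't verify without a Mac.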

04-25-2024 11:14 AM - edited 04-25-2024 11:38 AM

wiebe@CARYA wrote:

@Andrey_Dmitriev wrote: One thing I don't understand. Why are you struggling and fighting with slow LabVIEW code, on the limits of optimization, and not switching to a DLL-based solution?

You make it sound like another development tool (esp. a compiler) is a picnic.

If you're in a team (esp. a team of LabVIEW developers), adding the need for any additional tool (esp. a compiler) is a nightmare.

There's a balance between execution speed and development speed. If you (and/or your team) are not familiar with other compilers, there's a bias towards LabVIEW.

No, where did I write that this is "a picnic"? A mixed development environment is hard work, with additional dependencies, long debugging stories, and all such things.

Slightly off-topic, but anyway: if the team works on high-performance computations, then some members should be thoroughly familiar with the most suitable modern tools and frameworks. In my humble opinion, every professional software developer who works with LabVIEW (especially in machine vision) should be able to create a simple shared library, pass native LabVIEW types to it, and perform simple computations inside. I would never recommend starting with LabVIEW if the student doesn't know the C programming language (K&R is still an amazing book). Personally, I started with Fortran, then Pascal, Modula-2, then C and Delphi, and only after that did I touch LabVIEW (v.6.0, mid 2000), so I was 'mentally prepared' for this "hell" (yes, LabVIEW 6.0i was just hell). I'm still learning continuously, currently C#, Python, and Rust. And note that I have an M.Sc. in Physics, not in CS.

Hardware is another point: every good software engineer should understand how the CPU works, how the cache is organized, what a cache line is, how the OS works, virtual memory and paging, and so on (Andrew Tanenbaum is a 'must-have' on every bookshelf).

In general, not everything needs to be highly optimized; moreover, 'premature optimization is the root of all evil,' as Donald Knuth wrote. But above I've demonstrated how to achieve the same result 50-70 times(!) faster, and sometimes this is really needed (but not always). For example, my project from 2023 included four high-speed cameras (running synchronously at 12800 FPS), acquiring roughly 600+ GB of data in a few seconds, which needed to be stitched together with remapping and smooth invisible overlapping, two steps of flat field correction, and writing results in different formats. The postprocessing had to be done in minutes, not hours.

Everything was written in LabVIEW, based on IMAQ images. Geometrical calibration in VDM rocks, remapping was done using Intel IPP, video writing using OpenCV, and some 'bottlenecks' were rewritten in C using AVX intrinsics. So I used three libraries and two programming languages. In addition, the real-time sync part was done using cRIO with FPGA and LabVIEW RT. Achieving the required performance with LabVIEW's arrays and G code only? No way.

So, it always depends. LabVIEW is an amazing tool, I'm totally in love with it, and you're perfectly right, "there's a balance between execution speed and development speed", but there is no reason not to learn other programming languages and combine them if needed; otherwise you may lose a lot of the computer's potential. Nowadays, computers are extremely fast, but I still remember my PDP-11. And we haven't touched on the GPU topic yet... 😉
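For readers who have never passed a native LabVIEW array to a shared library, here is roughly what the `iTDHdl` type used in my C code earlier in the thread looks like. This is a sketch under assumptions: in a real project the typedef is generated by LabVIEW and wrapped in its packing headers, and LabVIEW itself owns the handle. `get_pixel` and `make_mock_image` are hypothetical helpers so that the example runs without LabVIEW.

```c
#include <stdint.h>
#include <stdlib.h>

/* Assumed layout of a 2D U32 array handle: two dimension sizes
   followed by the row-major data. */
typedef struct {
    int32_t  dimSizes[2]; /* [0] = rows, [1] = columns */
    uint32_t elt[1];      /* pixel data continues past this element */
} iTD;
typedef iTD **iTDHdl;

/* Read one pixel with an explicit range check (returns 0 when outside). */
uint32_t get_pixel(iTDHdl arr, int row, int col)
{
    int rows = (*arr)->dimSizes[0];
    int cols = (*arr)->dimSizes[1];
    if (row < 0 || row >= rows || col < 0 || col >= cols) return 0;
    return (*arr)->elt[row * cols + col];
}

/* Test helper only: builds a fake handle whose pixel values equal
   their linear index. Real handles come from LabVIEW. */
iTDHdl make_mock_image(int rows, int cols)
{
    size_t n = (size_t)rows * (size_t)cols;
    iTD *block = malloc(sizeof(iTD) + n * sizeof(uint32_t));
    iTDHdl h = malloc(sizeof(iTD *));
    if (!block || !h) { free(block); free(h); return NULL; }
    block->dimSizes[0] = rows;
    block->dimSizes[1] = cols;
    for (size_t i = 0; i < n; i++) block->elt[i] = (uint32_t)i;
    *h = block;
    return h;
}
```

On the LabVIEW side, the array would be passed to the Call Library Function Node as "Adapt to Type" with "Handles by Value", which is the configuration that matches a handle parameter like this one.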

04-25-2024 03:14 PM

I'm not a professional anymore; I'm retired.

I just dabble in this stuff in an attempt to reduce the rate of brain decay.