PXI-6602 Counter Input "-200141 Data Overwritten Error"

04-26-2024 02:12 PM

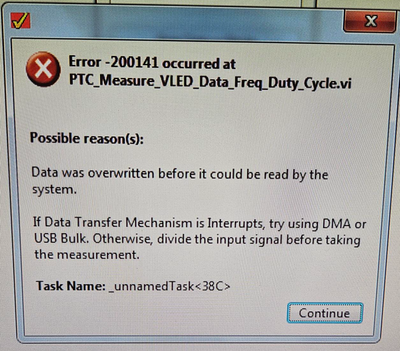

I am using a counter input on the PXI-6602 card to read the frequency of an external signal. The frequency is 830 kHz. Most of the time I can read back the frequency without any issues, but about 10% of the time when I request a frequency read I get the following error:

By default the DAQmx channel runs as DMA so that didn't help the issue. Here is a code snippet...

How can I resolve the intermittent "data overwritten" error? Thanks!

04-26-2024 02:46 PM

Short answer: quite possibly, you can't.

Longer answer: the source of this problem is a combo of the tiny 2-sample FIFO on the 6602 and the use of the shared-access PXI bus. You're running into a PC system limitation. About all you can hope to do is find ways to reduce other usage of the PXI bus while this task runs, but even that may not end up helping enough to prevent the error.

The best solution would be to use a newer DAQ device with a DAQ-STC3 timing chip (such as the PXIe-6612, or any PXIe-63xx X-series multifunction device). These would give you a much bigger FIFO and dedicated access to a faster bus.

Alternately, you can try the suggestion in the error text to divide the signal to create a lower frequency, then scale it back up. This will limit your measurement resolution, but that's likely to be a better tradeoff than a fatal error that prevents you from getting any measurement at all.

An easy way to divide by 4 or more is to set up a continuous counter pulse train using the external signal as the "timebase source". Define the pulses in units of "Ticks" and set Low, High, and Initial Delay (just to get in a good habit) to at least 2 each. The sum of Low + High will be the integer division factor.

Use a different counter to measure the frequency of that first counter's output, then scale by the sum of Low + High to calculate the original frequency.
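The scale-back step is simple arithmetic, sketched below in plain Python with no DAQmx calls. The function name `original_frequency_hz` and its parameters are hypothetical names chosen for illustration; `divided_hz` stands for whatever the second counter's frequency measurement returns.

```python
def original_frequency_hz(divided_hz: float, low_ticks: int, high_ticks: int) -> float:
    """Scale a divided-down frequency reading back up to the source frequency.

    low_ticks and high_ticks are the Low and High settings of the
    pulse-train counter, in ticks of the external signal.
    """
    # The post recommends at least 2 ticks for each of Low and High.
    if low_ticks < 2 or high_ticks < 2:
        raise ValueError("low_ticks and high_ticks must each be at least 2")
    divisor = low_ticks + high_ticks  # integer division factor
    return divided_hz * divisor


# Example: Low = 5 and High = 5 divide by 10, so an 83 kHz reading
# on the second counter corresponds to an 830 kHz source.
print(original_frequency_hz(83_000.0, 5, 5))
```

With Low = High = 2 the divisor is 4, which is the smallest division this method allows.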

-Kevin P

04-29-2024 09:29 AM

@Kevin_Price,

Thanks for the thorough explanation. Since I am limited to the use of the PXI-6602 card for now, I was thinking of creating a DAQmx channel that counts the counter input edges and then divides that by the time of the data sampling to get the frequency. Are there any other alternatives to get around the 6602 card buffer deficiency? Thanks!
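As a rough sketch of the edge-counting idea, the snippet below converts a series of cumulative edge counts, latched at a fixed sample rate, into frequency estimates. It is plain Python arithmetic and makes no DAQmx calls. The function name, the `counts` list, and the 32-bit rollover handling are assumptions for illustration, not taken from the original code snippet.

```python
COUNTER_BITS = 32  # assumption: the counter register is 32 bits wide


def frequencies_from_counts(counts, sample_rate_hz, counter_bits=COUNTER_BITS):
    """Estimate frequency from cumulative edge counts sampled at a fixed rate.

    Each estimate is (edges seen between two consecutive samples) times
    the sample rate, i.e. edges divided by the sample interval.
    """
    modulus = 1 << counter_bits
    freqs = []
    for prev, curr in zip(counts, counts[1:]):
        # The modulo keeps the difference correct if the counter rolled over.
        edges = (curr - prev) % modulus
        freqs.append(edges * sample_rate_hz)
    return freqs


# Example: counts latched every 1 ms (1000 Hz sample rate).
print(frequencies_from_counts([0, 830, 1660, 2491], 1000.0))
```

Each estimate moves in steps of one edge per interval, so at a 1000 Hz sample rate the resolution is 1 kHz.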

05-07-2024 10:04 AM

No, the buffer deficiency is fixed in the hardware. It *will* put an upper limit on your sustainable sample rate. The two main methods to reduce the sample rate are either my earlier suggestion to divide down the original pulse train or your idea to simply count the pulses during fixed intervals. As per usual, there are tradeoffs.

A. Original measurement: sample implicitly at 830 kHz, the rate of the original signal. When you don't error out, you get 830 kiloSamples/sec, each of which can have ~1% quantization error due to the 80 MHz onboard clock.

B. Divide down by (let's say) a factor of 10. Sample implicitly at 83 kHz, giving you 83 kiloSamples per sec, each with ~0.1% quantization error. You're essentially getting an average measurement across 10 consecutive intervals, so you lose some ability to see high-speed dynamics in return for your improved % error.

C. Count pulses over fixed intervals. Sample *explicitly* at (let's say) 1000 Hz, giving you 1 kiloSample per sec, each with ~0.12% quantization error (1 part in 830). Again, you're essentially getting another average measurement, but now it's across a fixed amount of time rather than a fixed # of original pulses.

In general, different measurement methods let you trade off sampling rates (and thus data bandwidth) with quantization error, and they aren't entirely equivalent. Compare B & C above where both yield approximately the same % error but with quite different sample rates. Out of apps that demand that kind of % error, some may prefer the higher rate with more data and more of the higher speed dynamics, others may prefer the lower rate with less data and dynamics.
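The approximate error figures for A, B, and C can be reproduced with a few lines of arithmetic. This sketch assumes the quantization error is roughly one count out of the total counted per sample: timebase ticks for the period-based methods A and B, signal edges for method C. The function names are hypothetical.

```python
TIMEBASE_HZ = 80e6  # onboard clock mentioned in the post
SIGNAL_HZ = 830e3   # signal frequency from the original question


def period_measurement_error(signal_hz, divisor=1, timebase_hz=TIMEBASE_HZ):
    """Relative error of about 1 timebase tick across `divisor` signal periods."""
    ticks_per_sample = timebase_hz * divisor / signal_hz
    return 1.0 / ticks_per_sample


def edge_count_error(signal_hz, sample_rate_hz):
    """Relative error of about 1 signal edge per fixed sample interval."""
    edges_per_sample = signal_hz / sample_rate_hz
    return 1.0 / edges_per_sample


rows = [
    ("A: direct", SIGNAL_HZ, period_measurement_error(SIGNAL_HZ)),
    ("B: divide by 10", SIGNAL_HZ / 10, period_measurement_error(SIGNAL_HZ, divisor=10)),
    ("C: count at 1 kHz", 1000.0, edge_count_error(SIGNAL_HZ, 1000.0)),
]
for name, rate_hz, err in rows:
    print(f"{name:18s} {rate_hz / 1e3:7.1f} kS/s  ~{err * 100:.2f}% error")
```

The results come out near 1.04%, 0.10%, and 0.12%, in line with the rounded figures quoted above.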

-Kevin P