From 11:00 PM CDT Friday, May 10 – 02:30 PM CDT Saturday, May 11 (04:00 AM UTC – 07:30 PM UTC), ni.com will undergo system upgrades that may result in temporary service interruption.

We appreciate your patience as we improve our online experience.

06-14-2010 05:12 PM

High Performance Analysis Library 2.0 is available now. New features include:

Please visit NI Labs to download the installer.

06-15-2010 02:10 AM

Hi,

Could you make the library available on the NI ftp site as well? When I download the zip file, the firewall of my company replies with:

The page you've been trying to access was blocked.

Reason: Active content was blocked due to digital signature violation. The violation is Missing Digital Signature.

Transaction ID is 4C1720598AB6DD0AAF94.

Renaming the .exe file in the zip to something like .ex_, or using a different compression method (7zip, rar), might help as well.

Regards,

Chris

06-15-2010 10:48 AM

Hi Chris,

It is on the NI ftp now. The download url is: ftp://ftp.ni.com/pub/devzone/NI_Labs/HighPerformanceAnalysisLibrary2_0Installer.zip.

Please feel free to let me know if you have more questions.

Regards,

Qing

06-15-2010 06:23 PM

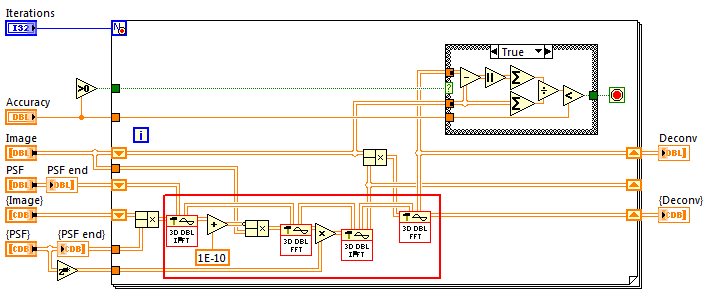

This is looking great, thanks! Great to have 3D FFTs (at last!) natively and fast in LabVIEW, and I'm also impressed to see SGL versions of all the routines. I've quickly benchmarked the 3D FFTs and see a similar improvement over the ASP routines as for 2D on a 2-core machine. Nice!

It does raise a few questions for me:

Again, many thanks for this. It looks very useful, even at the current level of implementation.

06-22-2010 11:05 PM

One further issue I've noted is that the 3D FFT routines are lacking the fftSize parameters.
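For anyone who needs the equivalent behaviour in the meantime: in the 1D and 2D FFT routines, an fftSize input conventionally zero-pads or truncates the data to the requested size before the transform, so the same effect can be had by resizing the volume by hand first. Below is a minimal sketch of that resizing step in plain Python, purely as an illustration. The helper name `resize_3d` and the nested-list layout are my own assumptions and are not part of the library.

```python
def resize_3d(volume, size, fill=0.0):
    """Zero-pad or truncate a nested-list 3D array to size = (n0, n1, n2).

    Hypothetical helper: mimics what an fftSize input usually does to the
    data before the transform is taken. It does not compute the FFT itself.
    """
    n0, n1, n2 = size
    # Start from a volume of the requested size filled with the pad value.
    out = [[[fill] * n2 for _ in range(n1)] for _ in range(n0)]
    # Copy the part of the input that fits; anything beyond is truncated.
    for i, plane in enumerate(volume[:n0]):
        for j, row in enumerate(plane[:n1]):
            for k, value in enumerate(row[:n2]):
                out[i][j][k] = value
    return out


vol = [[[1.0, 2.0], [3.0, 4.0]]]      # shape (1, 2, 2)
padded = resize_3d(vol, (2, 2, 3))    # zero-padded to shape (2, 2, 3)
cropped = resize_3d(vol, (1, 1, 1))   # truncated to shape (1, 1, 1)
```

The resized volume would then be passed to the 3D FFT as-is.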

09-28-2010 11:30 AM

Is there a plan to migrate the High Performance Analysis Library to the x64 platform to be compatible with LV x64 2010? If so, that would be great... Also, if there IS a plan, is there an approximate time frame as to when it's likely to occur?

09-28-2010 11:44 AM

Yes, 64-bit HPAL is in our plans, but I cannot give an exact date for when you will see it. This is a very new library, and there are many things that could still be added ...

By the way, we will have another release very soon. Thanks for your attention. Hope to hear more feedback from you.

11-23-2010 03:37 AM

@duetcat:

Could you please explain what you mean by "very soon"? What are the changes in the new release?

11-23-2010 09:18 AM

It was released. The main feature is sparse matrix functions. Here is the link:

http://decibel.ni.com/content/docs/DOC-13895

The next version is under development. Please feel free to send us your suggestions. Thanks.

08-10-2011 09:12 PM

Just doing some more benchmarking, this time of the Dot Product function. For my calculations, this High-Performance function is slightly slower than the built-in Linear Algebra function (LV 2010 and 2011), and considerably slower (3-4x) than the equivalent Multiply-Add for vectors smaller than 200. All techniques show a speed increase when used inside a parallel loop, but the ranking stays the same.
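To make the comparison reproducible, here is the shape of the timing harness, sketched in plain Python rather than as a LabVIEW diagram. The two dot-product variants are stand-ins for illustration only (they are not the HPAL or Linear Algebra VIs), and the function names and vector sizes are my own choices. Taking the best of several repeats at each size keeps the small-vector results from being swamped by timer noise.

```python
import timeit
from operator import mul


def dot_sum_map(a, b):
    """Dot product through a single library-style call chain."""
    return sum(map(mul, a, b))


def dot_multiply_add(a, b):
    """Dot product as an explicit multiply-add loop."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += x * y
    return acc


def benchmark(func, n, repeats=5, number=1000):
    """Return the best observed time per call, in seconds, for length-n vectors."""
    a = [float(i) for i in range(n)]
    b = [float(n - i) for i in range(n)]
    best = min(timeit.repeat(lambda: func(a, b), repeat=repeats, number=number))
    return best / number


if __name__ == "__main__":
    # Sweep across the size range where the ranking was observed to matter.
    for n in (10, 50, 200, 1000):
        t_map = benchmark(dot_sum_map, n)
        t_mad = benchmark(dot_multiply_add, n)
        print(f"n={n:5d}  sum/map: {t_map:.2e} s  multiply-add: {t_mad:.2e} s")
```

The absolute numbers from this sketch say nothing about LabVIEW; the point is only the method: same inputs, a sweep over vector length, and a best-of-N timing per variant.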

Have you benchmarked all of the functions against their built-in equivalents?