Organize Library Member VIs in the Project, Not on Disk

10-09-2023 11:24 AM

I'm sorry it came off that way Steen. That was not my intent.

I was simply giving you the same advice I would give to anyone else who posted similar code to the forums. I stand by it. I think it's good advice.

Of course, it was unsolicited advice so you can feel free to ignore it, if you prefer. I won't be offended.

CLA, CPI, CTD, LabVIEW Champion

DQMH Trusted Advisor

Read about my thoughts on Software Development at sasworkshops.com/blog

10-09-2023 12:46 PM - edited 10-09-2023 01:40 PM

@Taggart wrote:

@joerg.hampel wrote:

For the record, I don't agree with this recommendation, either. In my opinion, it makes perfect sense while working in the IDE (i.e. doing the programming) but does not take into account managing resources in SCC.

At HSE we have a rule of storing all manually created VIs for a DQMH module in a subfolder on disk, independently of where they are stored inside the library. This makes it much easier to understand the nature of resources while outside the IDE.

I'm curious why you need to "understand the nature of resources while outside the IDE"

Can you elaborate?

No one has yet answered my core question... I have some ideas where that might be useful, but I'm curious to hear what people are actually using disk folders for. I've heard "to keep things organized". I'm all for organization, but what greater purpose does it serve? What does it allow you to do that you can't do with a flat folder?

I have a few ideas of my own, where it might be more useful, but I'm curious to see what people are actually using that organization for.

The only useful thing I've seen so far is Steen suggesting that folders on disk might make it easier to refactor a library into several smaller libraries. I agree with that as a starting point for identifying that it needs to be split, and perhaps for identifying how to split things up. I'm not sure it is necessary though.

I would probably prefer to solve that problem in the IDE.

My way of doing that would be:

- create 2 separate virtual folders in the library I want to split. Label one "to keep" and the other "to move"

- move all VIs into 1 of the 2 virtual folders.

- create the new library in the project

- move the contents of the "to move" virtual folder to the new library and delete the now-empty "to move" virtual folder

- highlight those VIs in the project, go to the Files view, and drag them into the correct folder on disk (same as the new lvlib).

- then move the contents out of the "to keep" virtual folder in the original library and delete it because it is now empty.

- save all (and maybe mass compile).

Note I can do all that without any disk folders, so again not saying it is wrong, but trying to understand what the value is?

It is really just different ways of working. Not anything wrong with doing what Steen suggests, just different. Maybe I'm just thick, or maybe we just value different things and I'm just not seeing it. I'm just not seeing the value proposition of adding these extra folders on disk.

This example reminds me that there might be a good refactoring tool here, something like "extract library": you give it a new path and select which methods you want to move into the new library, and it moves them all (maybe with an option to copy instead of move, if you want both libraries to keep a copy of a particular VI).
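To make the "extract library" idea a bit more concrete, here is a rough Python sketch of just the planning half: given the members of a library, the selected file names, and a new folder, it lists what would be moved or copied. The function name `plan_extract` and the example file names are made up for illustration. It deliberately does not touch the disk, since the actual move would still have to be done by LabVIEW so that the links stay intact.

```python
from pathlib import PurePosixPath


def plan_extract(members, selected, new_dir, copy=False):
    """Return a list of (action, source, destination) tuples.

    members  -- paths of the VIs currently in the original library
    selected -- file names chosen for the new library
    new_dir  -- folder of the new library on disk
    copy     -- keep the originals in place when True
    """
    wanted = set(selected)
    known = {PurePosixPath(m).name for m in members}
    missing = wanted - known
    if missing:
        raise ValueError(f"not in the library: {sorted(missing)}")

    action = "copy" if copy else "move"
    plan = []
    for member in members:
        source = PurePosixPath(member)
        if source.name in wanted:
            target = PurePosixPath(new_dir) / source.name
            plan.append((action, str(source), str(target)))
    return plan


if __name__ == "__main__":
    # Hypothetical library contents, for illustration only.
    members = [
        "Scripting/Get Icon.vi",
        "Scripting/Set Icon.vi",
        "Scripting/Open VI.vi",
    ]
    for step in plan_extract(members, ["Get Icon.vi", "Set Icon.vi"], "Icon"):
        print(step)
```

Returning a plan instead of acting on it keeps the copy-versus-move choice visible before anything changes.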

Curious - would anyone find that useful?

CLA, CPI, CTD, LabVIEW Champion

DQMH Trusted Advisor

Read about my thoughts on Software Development at sasworkshops.com/blog

10-09-2023 04:58 PM

@Taggart wrote:

@Taggart wrote:

@joerg.hampel wrote:

This makes it much easier to understand the nature of resources while outside the IDE.

Can you elaborate?

No one has yet answered my core question...

Sorry, Sam, we're super busy these days with customer work and it's hard to find time for the forums (I'm typing this message at midnight).

We've seen quite often that new users of DQMH get creative in reworking the structure of a DQMH module, both in the IDE and on disk. As we know, moving framework resources into different locations rarely is a good idea.

Giving people a simple guidance system as to what they can reorganise (i.e. all the resources they created manually) vs. what they must not touch at any time (anything created automatically via the scripting) has really made a difference in keeping code from getting broken.

In my experience, it is *not* enough to give that guidance system in the IDE only. People will look at source files in the file system, to duplicate code or reorganise it, or maybe even only to understand it better. It also happens frequently that we look at the git history to figure out what happened. Git does not show us the inner workings of a library or class, we only see the file system paths.
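As a small illustration of why the paths matter outside the IDE: with a list of changed paths (for instance from `git log --name-only`), a few lines of Python can separate user code from framework code purely by folder name. This is only a sketch. The folder name `User` and the example paths are assumptions for illustration, not the actual HSE convention.

```python
from pathlib import PurePosixPath

USER_FOLDER = "User"  # hypothetical name for the subfolder of manually created VIs


def classify(changed_paths, user_folder=USER_FOLDER):
    """Split changed paths into user code and framework code by folder name."""
    groups = {"user": [], "framework": []}
    for raw in changed_paths:
        # Only the folders above the file decide which group it belongs to.
        parts = PurePosixPath(raw).parent.parts
        key = "user" if user_folder in parts else "framework"
        groups[key].append(raw)
    return groups


if __name__ == "__main__":
    changed = [
        "Modules/Logger/Main.vi",
        "Modules/Logger/User/Format Line.vi",
    ]
    for kind, paths in classify(changed).items():
        print(kind, paths)
```

With a flat folder, the same question could only be answered by opening the library in LabVIEW.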

A clear separation between framework code and user code helps with various tasks: Refactoring user code, duplicating user code, removing user code, even switching frameworks.

I understand and appreciate that maintaining structure in two different places adds complications. I don't want to talk anybody into changing their way of working. In my experience and personal opinion, and for our use case - working with teams of developers with varying degrees of experience - the approach of maintaining a certain degree of structure on disk turns out to be the lesser of two evils.

As so often, what works best for Darren or Sam or Steen or all the other prolific people reading and posting here just cannot be rolled out into the wild and just is not applicable to the average LabVIEW user who does not do software development in a full-time position and maybe only gets to work on their code once in a blue moon.

DSH Pragmatic Software Development Workshops (Fab, Steve, Brian and me)

Release Automation Tools for LabVIEW (CI/CD integration with LabVIEW)

HSE Discord Server (Discuss our free and commercial tools and services)

DQMH® (The Future of Team-Based LabVIEW Development)

10-09-2023 08:10 PM - edited 10-09-2023 08:14 PM

@joerg.hampel wrote:

Spoiler: On a side note, #teamhampelsoft discussed this briefly today and we all agree that each of us benefits from that separation on disk - we wouldn't want to miss it, and we *are* full-time developers 🙂

Sorry Joerg. didn't mean to make too much extra work for you. Your explanation makes sense and is kind of along the lines of what I was thinking.

Although one potential benefit I could think of that you didn't explicitly mention was running VI analyzer. I find the scripted DQMH VIs often fail my VI analyzer tests. If they were in their own separate directory I could just exclude them. I'm not convinced that a separate folder in the file system is the only way to do that, but it would make it easier.
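Roughly what I have in mind for the exclusion, sketched in Python: walk the module folder and collect every VI except those under a given folder name. The folder name `Scripted` is hypothetical, and this only builds the file list. How that list gets handed to VI Analyzer is not covered here.

```python
from pathlib import Path


def vis_to_analyze(root, excluded_dirs):
    """Return sorted *.vi paths (relative to root), skipping excluded folder names."""
    excluded = set(excluded_dirs)
    root = Path(root)
    selected = []
    for path in root.rglob("*.vi"):
        relative = path.relative_to(root)
        # Keep the file only if none of its parent folders is excluded.
        if excluded.isdisjoint(relative.parent.parts):
            selected.append(relative.as_posix())
    return sorted(selected)


if __name__ == "__main__":
    # "Scripted" is a made-up folder name for the generated framework VIs.
    for vi in vis_to_analyze(".", ["Scripted"]):
        print(vi)
```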

CLA, CPI, CTD, LabVIEW Champion

DQMH Trusted Advisor

Read about my thoughts on Software Development at sasworkshops.com/blog

10-11-2023 03:56 AM - edited 10-11-2023 04:08 AM

@Taggart wrote:

I'm sorry it came off that way Steen. That was not my intent.

I was simply giving you the same advice I would give to anyone else who posted similar code to the forums. I stand by it. I think it's good advice.

Of course, it was unsolicited advice so you can feel free to ignore it, if you prefer. I won't be offended.

Thank you Sam, I'll pick it up again. Unsolicited advice is sometimes the best, since often we are not aware of what the real question might be. If others can spot that, all the better. My issue was how your reply was phrased: It was a 100% categorization of the code as garbage, and 0% thought given to the example (hey, HAD the code not been garbage, would the segregation into a family of functions per group have had any value on the disk as well?).

For instance, I do not dismiss the immense value of Caraya because it is 409 files in one library. I do not care about that, I use it happily and am glad I do not have to install 30 packages to start using Caraya. When they have 409 files on disk, do they then get value from organizing them in folders? Yes, I believe they do. I would need that disk organization, if I were to manage a 409-file library.

Caraya could have been split up into multiple libraries, perhaps 30, and stayed in one package. But that's also not easily manageable in LabVIEW. Nested libraries pose many of the same problems when you move stuff around as trying to keep lib and folders in sync: If you have a folder per library, then if you move a file from one lib to another, now you have a library pointing into another library's folder. Most probably, Caraya is evolving. They have recently disconnected from OpenG dependencies, perhaps these 409 files are just a step along the way. Much code is like that (constantly evolving).
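The "library pointing into another library's folder" situation is easy to state as a check. Here is a minimal Python sketch, assuming the library membership has already been obtained somehow and is passed in as plain data. The function name, the data layout, and the example paths are all hypothetical.

```python
from pathlib import PurePosixPath


def members_outside_folder(libraries):
    """Map each library name to the members stored outside its own folder.

    libraries -- {library name: (library folder, [member file paths])}
    """
    strays = {}
    for name, (folder, members) in libraries.items():
        home = PurePosixPath(folder)
        # A member is "at home" if the library folder is one of its ancestors.
        outside = [m for m in members if home not in PurePosixPath(m).parents]
        if outside:
            strays[name] = outside
    return strays


if __name__ == "__main__":
    libraries = {
        "Array": ("src/Array", ["src/Array/Sort.vi", "src/String/Trim.vi"]),
        "String": ("src/String", ["src/String/Pad.vi"]),
    }
    print(members_outside_folder(libraries))
```

With a flat folder the check is trivially satisfied, which is one way of seeing why the flat layout avoids the sync problem in the first place.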

Trying to minimize the waffle, I see two subjects - the first is the primary topic of this thread, the latter has to do with my example code that you prefer to split up (and I don't necessarily disagree with your points on that, in general):

1. Source file organization on disk

I think of this quote by Abelson and Sussman: “Programs must be written for people to read, and only incidentally for machines to execute”.

I believe that Darren's proposal to organize code in the library only, and keep a flat folder structure, is mainly due to tool limitations creating problems for us (which is a VERY real consideration, I'm definitely not contesting that or Darren's proficiency). If disk, project, and SCC organization were automatically kept in sync by our tools, I think that people would prefer to have them in sync. We can test this hypothesis with a couple of questions:

- Why do we require meaningful file names on disk, but not a meaningful folder structure?

- Why do we see value in library organization, couldn't we keep that a flat structure as well?

Imagine files in the library were labelled as part of the library creation instead of being stuck with their file names. Would we then recommend people to name their files on disk "1.vi", "2.vi" etc, to give the library creator maximum freedom in labelling files for your use? I'd guess not, and the reason is the same as why I prefer a disk organization: I need all the help I can get while building my library. I go looking in the "Array" folder when I want to add some array functions to my library. Whenever a person needs to find the right piece of code, it should be easy to identify. Name and grouping helps identify function and context. And we do go looking on disk for stuff, not least when we build and maintain our library. But also for other activities, like learning about the code and small edits and big refactors.

2. "Single responsibility" of code in libraries

As mentioned, I have considered splitting the shown scripting package up into multiple packages. But not 13. Perhaps "Icon" would be a good subject for separation. Several of the other folders/groups do belong together, they would become fairly useless without each other (for instance things that use GObject). If you needed to do something yourself with "GObject", and it wasn't on a block diagram or a front panel, then, agreed, you would need to install this scripting package that also dragged a couple of FP and BD functions along with it. Is that a good tradeoff? I think so, because in probably >99% of use cases you would be dealing with FP or BD as you operate on GObjects. In some of the remaining few use cases, you might find out along the way that you need an FP or BD function after all, and now it's right there for your discovery, rather than you re-implementing it yourself because you didn't know something was already made that fit your "GObject" library's API like a glove.

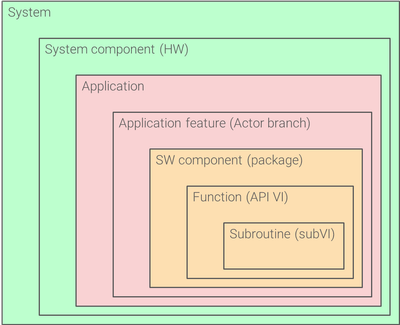

This is a balance of course, and it can be struck several ways without being dead wrong. I'm a huge fan of single responsibility, also outside OO. As I've mentioned earlier I have presented on that many times in the past. I often illustrate it with a view like this:

The (single) responsibility changes with scope

In my experience the cohesion balance depends on what (and for whom) I'm building: In large parts of the responsibility scope we talk about Components. Look hard at the Interface Segregation Principle, and you fare well with creating components. Sometimes you are creating infrastructure that is not a single component, then it can be favorable to divide into Layers (plain inheritance, templates, factory bases and so on). Some like to divide into Types (subVIs, accessors, typedefs etc.), which in my mind is an antipattern because it does not add information but instead hides complexity. We don't have one place where we put all our Excel files either, right? Another type of code segregation I work with a lot is the Toolbox. A library of complementary reuse VIs that are in this collection not just because they share one single functionality, but because they are often used together. A "toolbox" may seem to stretch the single responsibility paradigm, but it's just a different level of single responsibility: It gives you the tools to work easily with Scripting (for instance). Within that "Scripting" scope, there are lower levels of stuff that also have a single responsibility in their own right: Stuff for scripting on VIs, stuff for scripting on Libraries, stuff for scripting directly with a GObject and so on. But splitting a toolbox into 13 toolboxes could be counterproductive toward making it easy for someone to use that toolbox in a cohesive way. Of course, at any point in time a toolbox might need to be split up as well, and that's why refactoring is (or should be) a common practice amongst programmers: Refactor exactly when you discover it's beneficial. And for refactoring, a clear disk organization really helps me out.

10-11-2023 08:37 AM

@SteenSchmidt wrote:

@Taggart wrote:

I'm sorry it came off that way Steen. That was not my intent.

I was simply giving you the same advice I would give to anyone else who posted similar code to the forums. I stand by it. I think it's good advice.

Of course, it was unsolicited advice so you can feel free to ignore it, if you prefer. I won't be offended.

Thank you Sam, I'll pick it up again. Unsolicited advice is sometimes the best, since often we are not aware of what the real question might be. If others can spot that, all the better. My issue was how your reply was phrased: It was a 100% categorization of the code as garbage, and 0% thought given to the example (hey, HAD the code not been garbage, would the segregation into a family of functions per group have had any value on the disk as well?).

For instance, I do not dismiss the immense value of Caraya because it is 409 files in one library.

I didn't mean to imply it was garbage.

To your example of Caraya: Tools don't have to be perfect to provide value. I use a variety of open source tools. When I look at the source code, some of them are very well written in exactly the manner I would have chosen to write them. Many aren't. The source code for some of them makes me cringe a bit. They do some things in ways that I would consider very poor style. As long as they are functional and do what they purport to do in a performant enough manner for what I am using them for, I still use them because they provide some value and it's not worth rewriting them myself. I also have some internal tools that I have written in the past that could be better, but again they are providing value as is, so is it worth the investment?

As I always tell my consultant clients, if you are happy with what you are doing now and the results you are currently getting, well then keep doing it. Doesn't necessarily mean there isn't a better way.

I guess part of the reason I am struggling with seeing the value with this is that I don't often see myself interacting with source files outside of the IDE.

The first time I tried to move a VI on disk outside of LabVIEW, it didn't go well. When I opened my project, LabVIEW complained it couldn't find it. I quickly learned, like most of us have, to do those manipulations in the Files view inside the project. The only time I really use the file system to manipulate files outside of LabVIEW is for Git.

CLA, CPI, CTD, LabVIEW Champion

DQMH Trusted Advisor

Read about my thoughts on Software Development at sasworkshops.com/blog

10-11-2023 07:28 PM

@SteenSchmidt wrote:

Another type of code segregation I work with a lot is the Toolbox. A library of complementary reuse VIs that are in this collection not just because they share one single functionality, but because they are often used together. A "toolbox" may seem to stretch the single responsibility paradigm, but it's just a different level of single responsibility: It gives you the tools to work easily with Scripting (for instance). Within that "Scripting" scope, there are lower levels of stuff that also have a single responsibility in their own right: Stuff for scripting on VIs, stuff for scripting on Libraries, stuff for scripting directly with a GObject and so on. But splitting a toolbox into 13 toolboxes could be counterproductive toward making it easy for someone to use that toolbox in a cohesive way. Of course, at any point in time a toolbox might need to be split up as well, and that's why refactoring is (or should be) a common practice amongst programmers: Refactor exactly when you discover it's beneficial. And for refactoring, a clear disk organization really helps me out.

That totally makes sense. I wonder if there is another way to package a bunch of tools (individual libraries) into a toolbox? My inclination would be to use VIPM for that. Have one VIPM package that is the toolbox and installs several libraries. And to make it easier to use, maybe they all share a common palette? Just thinking out loud.

CLA, CPI, CTD, LabVIEW Champion

DQMH Trusted Advisor

Read about my thoughts on Software Development at sasworkshops.com/blog
