40 core machine has 16 idle cores when using parallel loop? LabVIEW 14.0f1 (64-bit)

09-04-2015 09:35 AM

Hi, first post, bear with me.

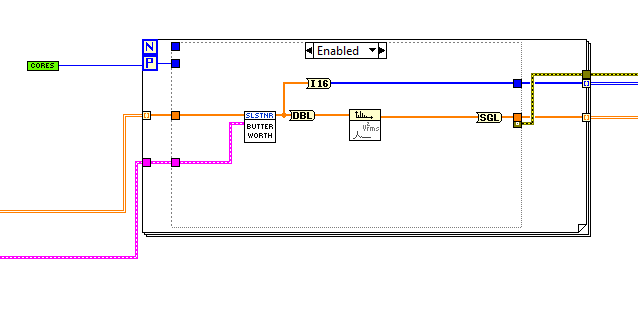

I'm trying to run a Butterworth filter and an auto power spectrum on a large 2D data set (20 million points minimum) and have attempted to use the parallel 'for' loop with minimal luck; it's much faster than an unparallelized 'for' loop but doesn't use anywhere near enough of the CPU.

I am running my application on a high-spec PC with 2x Xeon E5-2690 v2 processors @ 3GHz, each with 20 cores, and when my application runs I see that 16/40 cores are seemingly idle and the CPU usage never goes higher than 10%. The number of parallel instances is set to 64 and I have a CPU Info.vi that I am using to read out the number of processors. I'm subtracting 2 from the number of processors so that there is still space for the rest of the application to run. The Butterworth VI is set to preallocated clone and debugging is disabled for both this and the calling function.

Here's a pic of the code:

I suppose my question is: what am I doing wrong? The pink wire is a settings cluster that I use across the entire application. Is this holding up the parallel loop? Surely it uses the same settings for each iteration and therefore doesn't need to wait?

09-04-2015 09:45 AM - edited 09-04-2015 10:01 AM

Your CPUs only have 10 real cores each, so all you have is 20 real cores total. (Your cores are hyperthreaded for 40 virtual cores total, which gives you a slight additional advantage.)

In the most ideal case, you should get a 20x speedup. How big is the dataset? How long does it take to execute one iteration? How fast is it when you force serial execution? How many iterations do you do (i.e. how many rows are in your input dataset)? Do you really need all these type conversions?

My machine is a dual E5-2687W (16 real cores, 32 virtual cores) and I get a ~17x speedup when using all cores. The problem is such that each execution is relatively slow (~20ms), so the parallelization overhead is minimal. Here are some benchmarks.

09-04-2015 10:10 AM

Without the parallel loop each run takes ~450ms, with the parallel loop it takes ~180ms so I'm only getting about a 2.5x speedup. The dataset is 20000x1000 so 20 million points (as mentioned).
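The timings above can be restated as a speedup and a parallel efficiency. The following is a minimal sketch in Python using only the numbers quoted in this thread; the 20 real cores come from the earlier reply, and applying Amdahl's law to back out a parallel fraction is an assumption of this sketch, not something measured here.

```python
# Timings quoted in the thread, in milliseconds per run.
serial_ms = 450.0
parallel_ms = 180.0
real_cores = 20  # 2 CPUs x 10 physical cores, per the earlier reply

speedup = serial_ms / parallel_ms      # how many times faster the parallel run is
efficiency = speedup / real_cores      # fraction of the ideal 20x actually achieved


def amdahl_parallel_fraction(speedup, workers):
    """Solve Amdahl's law S = 1 / ((1 - p) + p / N) for the parallel fraction p.

    Assumption: the run behaves like a fixed serial part plus a perfectly
    parallel part, which is only a rough model of a real machine.
    """
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / workers)


p = amdahl_parallel_fraction(speedup, real_cores)

print(f"speedup: {speedup:.2f}x")
print(f"efficiency on {real_cores} real cores: {efficiency:.1%}")
print(f"implied parallel fraction: {p:.2f}")
```

Under that model, a bit more than a third of each run behaves as if it were serial. That would point towards a shared bottleneck rather than too few loop instances, but it is an estimate from two timings, not a diagnosis.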

I should have mentioned that none of the cores are maxed out and only 4 are greater than 33% usage.

I've had a look at your benchmarks before and I can't see how to apply the same principles to my application. There's no chance of using memoization as the data coming in is almost always different and I don't understand how else you have controlled the cache.

How can I make use of the virtual cores?

The odd thing is this code runs just as fast on my laptop (i7-3720QM @ 2.6GHz) which has 8 cores, all of which never use more than 33% each, but at least they're all used.

09-04-2015 10:16 AM

To answer the rest of your questions: I have 1000 rows/iterations, the data type changes can be moved elsewhere but I'd be surprised if that is what's stinging the parallelization and I don't know how to force serial execution... I'll look into that now.

09-04-2015 10:18 AM

Oh I see, you mean the ability to force iterations sequentially.

That takes me back to ~450ms per run or ~450us per iteration.

09-04-2015 10:19 AM

@bory wrote:

To answer the rest of your questions: I have 1000 rows/iterations, the data type changes can be moved elsewhere but I'd be surprised if that is what's stinging the parallelization and I don't know how to force serial execution... I'll look into that now.

Well, you set the number of parallel instances to 1. 😄 I think you already did that.

09-04-2015 10:27 AM - edited 09-04-2015 10:28 AM

@bory wrote:

The dataset is 20000x1000 so 20 million points (as mentioned).

Your CPUs have 25MB of cache.

With 20 million DBL, SGL and I16 points concurrently in memory (14 bytes total per point, i.e. 280MB or ~10x the cache!), plus all the storage for intermediate results in the subVIs, etc., you are way exceeding the CPU cache and a lot of time is probably spent shoveling data around. Just guessing. I bet your CPU cores are starved for data.
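The estimate above is easy to reproduce. This is a rough sketch in Python, assuming one DBL, one SGL and one I16 copy of every point is held at the same time and taking "MB" as 10^6 bytes; it ignores the intermediate buffers inside the subVIs, so the real footprint would be larger.

```python
# Element sizes in bytes for the three LabVIEW numeric types named above.
BYTES_PER_TYPE = {"DBL": 8, "SGL": 4, "I16": 2}

points = 20000 * 1000               # dataset size quoted in the thread
cache_bytes = 25 * 10**6            # 25 MB of cache, as stated above

bytes_per_point = sum(BYTES_PER_TYPE.values())   # one copy in each type
total_bytes = points * bytes_per_point
ratio = total_bytes / cache_bytes                # how many caches' worth of data

print(f"{bytes_per_point} bytes per point")
print(f"{total_bytes / 10**6:.0f} MB total")
print(f"{ratio:.1f}x the cache size")
```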

09-04-2015 10:51 AM

@bory wrote:

I'm subtracting 2 from the number of processors so that there is still space for the rest of the application to run.

...

The Butterworth VI is set to preallocated clone and debugging is disabled for both this and the calling function.

I would not subtract the two. See if it makes a difference.

Have you tried inlining the subVI?

Can you attach the code shown?

09-04-2015 11:01 AM

I'll do this on Monday. It's pubtime here in Blighty.

Thanks for your suggestions so far.

09-04-2015 11:07 AM

Have a nice weekend! 😄

On Monday, also try to figure out the relative time for the two main operations. Maybe it would be more efficient to do them in two sequential parallel loops, one for each. For example, the first one is 2D SGL in and 2D SGL out, so maybe inplaceness can be improved. Also look at the buffer allocation tools.
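As a text-language illustration of that measurement, the sketch below times two stages in two separate loops and reports each stage's share of the total. It is Python rather than LabVIEW, and `smooth` and `mean_power` are hypothetical stand-ins for the filter and the power spectrum, not the real algorithms; the test data is made up as well.

```python
import time


def time_stage(stage, rows):
    """Run one stage over every row and return (outputs, elapsed seconds)."""
    start = time.perf_counter()
    outputs = [stage(row) for row in rows]
    return outputs, time.perf_counter() - start


def smooth(row):
    """Hypothetical stand-in for the filter: a 3-point moving average."""
    out = []
    for i in range(len(row)):
        window = row[max(0, i - 1):i + 2]
        out.append(sum(window) / len(window))
    return out


def mean_power(row):
    """Hypothetical stand-in for the spectrum step: mean squared value."""
    return sum(x * x for x in row) / len(row)


# Small synthetic dataset: 50 rows of 200 points each.
rows = [[float((i * j) % 7) for j in range(200)] for i in range(50)]

filtered, t_filter = time_stage(smooth, rows)        # first loop: rows in, rows out
powers, t_power = time_stage(mean_power, filtered)   # second loop: rows in, scalars out

total = t_filter + t_power
print(f"filter share:   {t_filter / total:.0%}")
print(f"spectrum share: {t_power / total:.0%}")
```

Splitting the work this way shows which stage dominates before any effort goes into restructuring the loops. The shares printed here say nothing about the real VIs; only the measurement pattern carries over.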