CPU update from 4770k to 13900K but not much increase in speed in a data processing VI

Solved! 09-08-2023 11:22 AM

@obarriel wrote:

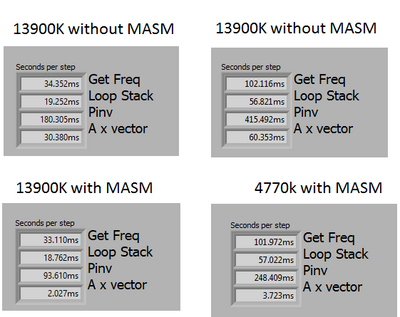

Yes my original question was why the performance improvement from 4770k to 13900K was so limited. And that still remains. But anyway, I also appreciate very much any tips to make the code more efficient. Trying to learn from there.

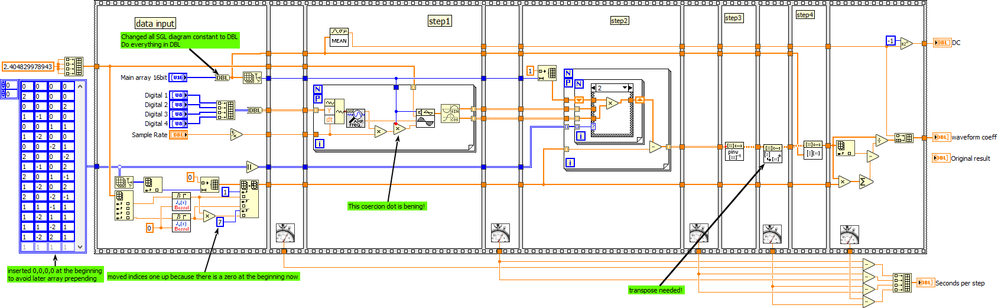

Yes, it is always wrong to just throw more expensive hardware at inefficient code, and I think your real issue was always just to improve the code. 😄 Since most of the time is spent in the PINV, you don't really have much slack to tighten elsewhere, but here's a cleaned-up version of your non-MASM code to give you some ideas.

Note that this is a near "literal" cleanup. If you tell us the details of the overall algorithm, maybe it could be done very differently and faster.

09-08-2023 11:31 AM

Thank you very much again.

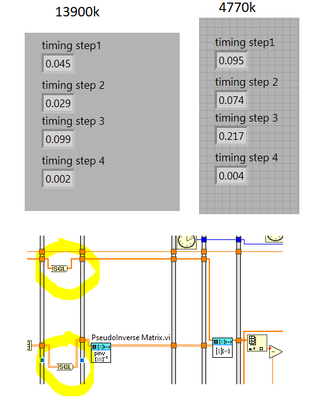

I have been doing more trials. As you said, it is best to set everything to double at the beginning. But for the last two steps I get a significant speedup (with MASM) if I switch everything to SGL and do the pseudoinverse and the vector-matrix calculation in single precision.

09-08-2023 11:37 AM

@obarriel wrote:

Yes my original question was why the performance improvement from 4770k to 13900K was so limited. And that still remains. But anyway, I also appreciate very much any tips to make the code more efficient. Trying to learn from there.

@Yamaeda's post makes it very plausible that memory is a bottleneck.

That's where you'll get the most benefit: preventing copies (incl. dbl<->sgl conversion).

There are 'rule of thumb' lists for expected performance gains (I'm making these numbers up completely, but lists like this exist):

0 to 10X syntax tweaking

0 to 100X memory tweaking

0 to 10000X for algorithm changes

Of course, any or all of these might already be optimal at any point in time.

09-08-2023 11:48 AM

@obarriel wrote:

Thank you very much again.

I have been doing more trials. As you said, it is best to set everything to double at the beginning. But for the last two steps I get a significant speedup (with MASM) if I switch everything to SGL and do the pseudoinverse and the vector-matrix calculation in single precision.

If the code before this didn't change, you're better off converting to sgl before concatenating those arrays of dbls.

Building (concatenating) an array will always require a copy (well, maybe not if an element is empty). Building an array of dbls and then converting to sgl will be slower than converting 2 arrays to sgl and then concatenating.

You might consider showing us the whole picture...
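The ordering argument above can be sketched outside LabVIEW. This is a minimal Python illustration, not the poster's code: the standard-library `array` module stands in for LabVIEW arrays, the function names are hypothetical, and it assumes that every conversion and every concatenation allocates one new buffer. Bytes allocated are only a rough proxy for run time, and the LabVIEW compiler may behave differently.

```python
from array import array

def concat_then_convert(a, b):
    """Concatenate two DBL arrays first, then convert the result to SGL."""
    joined = a + b                   # new DBL buffer, 8 bytes per element
    result = array("f", joined)      # new SGL buffer, 4 bytes per element
    allocated = joined.itemsize * len(joined) + result.itemsize * len(result)
    return result, allocated

def convert_then_concat(a, b):
    """Convert each DBL array to SGL first, then concatenate."""
    a_sgl = array("f", a)            # new SGL buffer
    b_sgl = array("f", b)            # new SGL buffer
    result = a_sgl + b_sgl           # new SGL buffer holding both parts
    allocated = sum(x.itemsize * len(x) for x in (a_sgl, b_sgl, result))
    return result, allocated

# Hypothetical sample data: two short DBL arrays.
first = array("d", [1.0, 2.0, 3.0])
second = array("d", [4.0, 5.0, 6.0])

late, late_bytes = concat_then_convert(first, second)
early, early_bytes = convert_then_concat(first, second)
print(late == early, late_bytes, early_bytes)
```

Under these assumptions both orderings give the same SGL array, but converting first allocates 16 bytes per input-pair element instead of 24, because no double-precision intermediate of the full length is ever built.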

09-08-2023 11:49 AM - edited 09-08-2023 12:02 PM

Yes, since your data is only 16-bit, SGL is sufficient. I think all MASM linear algebra works fine with SGL (example), although the stock linear algebra does not, so that's what you should be using. The main problem was the constant bouncing between representations as well as the array resizings.

And yes, if you can do SGL, twice as much data can fit in the CPU cache, for example.
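Both statements can be checked with a small Python sketch (standard library only; Python stands in for LabVIEW here, and `survives_sgl` is a hypothetical helper). A single-precision float has a 24-bit significand, so every signed 16-bit integer should survive a round trip through 4 bytes unchanged. This assumes the raw samples are integers; values that are scaled or accumulated afterwards can still pick up rounding error.

```python
import struct
from array import array

def survives_sgl(value):
    """True if value is unchanged after a round trip through a 4-byte float."""
    (back,) = struct.unpack("<f", struct.pack("<f", float(value)))
    return back == value

# Every signed 16-bit sample value, from -32768 to 32767.
all_exact = all(survives_sgl(v) for v in range(-32768, 32768))

# Contrast: the first integer that needs more than 24 significant bits.
too_wide = survives_sgl(2**24 + 1)

sgl_bytes = array("f").itemsize   # bytes per SGL element
dbl_bytes = array("d").itemsize   # bytes per DBL element
print(all_exact, too_wide, sgl_bytes, dbl_bytes)
```

With 4 bytes per element instead of 8, the same cache holds twice as many SGL samples as DBL samples.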

09-08-2023 11:59 AM

I share here the version from CA, modified to use the MASM toolkit.

As a summary, I include this comparison:

09-08-2023 12:00 PM

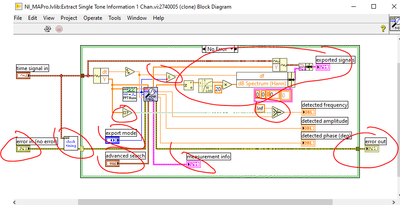

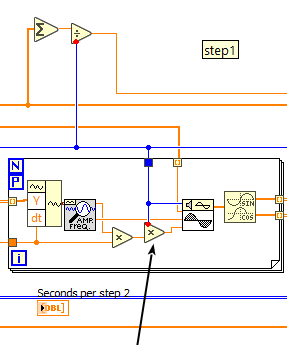

If you're really going for 'as fast as possible', save a copy of this VI and strip what you don't need:

That tone VI in there can probably be reduced. That code is, well, "special"...

It outputs (creates) arrays that you don't use. It's not the most time-consuming part, but it's wasteful.

09-08-2023 12:31 PM

Ok, I know it's kinda bad form to quote myself, but I'm genuinely curious to get a response from the OP about something I brought up earlier today:

@Kevin_Price wrote:

I notice in step 1 that you're generating a sine wave pattern that might contain a lot of redundant information. For example, if the "Main array 16bit" is sized at ~1M, and your detected frequency is maybe 1/100 of the sample rate, then you'd be generating 10k cycles worth of sine wave.

If so, then what exactly do you learn by doing all that downstream processing on 10k cycles worth of this generated sine that you wouldn't learn by processing a much smaller # of cycles, perhaps as small as 1?

If the 'cycles' input to the sine pattern generator is often >>1, this seems like a prime candidate to consider for speeding things up dramatically. It doesn't speak to the CPU differences, but perhaps it could make the point moot?

The size of that sine wave array seems to drive almost all the downstream processing. So I went back and dug up the sample code you posted and that array contains 160k sine wave cycles! Zoomed in, it isn't very clean looking with a little less than 4 samples per cycle, so *maybe* (?) you learn a bit more from 10 cycles than 1. But I don't understand what you gain from the remaining 99.9%+.

If you could cut your data size down by a factor of 1000, THAT would be a much bigger win than the other tweaks we're discussing.

So am I missing something? Is there a reason you can't do that?

-Kevin P
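The arithmetic in this post can be written out as a short Python sketch. The function names are hypothetical, the numbers are the ones quoted above, and the reduction factor only translates into run time under the assumption that the downstream processing scales at least linearly with array length.

```python
def cycles_in_record(n_samples, samples_per_cycle):
    """Number of sine cycles contained in a record of n_samples."""
    return n_samples / samples_per_cycle

def reduction_factor(total_cycles, cycles_kept):
    """How much smaller the data gets if only cycles_kept cycles are processed."""
    return total_cycles / cycles_kept

# ~1M samples with the detected frequency at 1/100 of the sample rate.
print(cycles_in_record(1_000_000, 100))

# The posted sample code generated 160k cycles; keep 10 or 160 of them.
print(reduction_factor(160_000, 10))
print(reduction_factor(160_000, 160))
```

Keeping 160 of the 160k cycles corresponds to the factor of 1000 mentioned above; keeping only 10 would shrink the data by a factor of 16000.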

09-08-2023 04:48 PM - edited 09-08-2023 04:54 PM

The Mean VI is slow; replace it with native functions for a modest speedup. I went from 82 to 72 ms.

EDIT: The speedup may be smaller after running a few times. The first run was slow.
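Both points here, swapping a general-purpose mean for primitive operations and not trusting a slow first run, can be illustrated with a Python analogy. This is not LabVIEW: `statistics.mean` merely plays the role of a convenient library routine and `sum(x) / len(x)` the role of native functions. `time_ms`, its parameters, and the sample data are hypothetical.

```python
import statistics
import time

def time_ms(func, data, warmup=2, repeats=5):
    """Best wall time in ms over `repeats` runs, after `warmup` untimed runs."""
    for _ in range(warmup):          # discard the slow first runs
        func(data)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(data)
        best = min(best, (time.perf_counter() - start) * 1000.0)
    return best

def library_mean(data):
    """Mean via the general-purpose library routine."""
    return statistics.mean(data)

def native_mean(data):
    """Mean via primitive sum and length operations."""
    return sum(data) / len(data)

samples = [float(i % 1000) for i in range(20_000)]
print(f"library mean: {time_ms(library_mean, samples):.3f} ms")
print(f"native mean:  {time_ms(native_mean, samples):.3f} ms")
```

Timings depend on the machine and interpreter, so no numbers are claimed here; the point is only that the comparison should be made on warmed-up runs and that both routines must return the same value.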
