Interpolate Pixel

04-19-2024 09:59 AM

It's still not clear to me what the goal is.

The original VI returned a single interpolated pixel.

The focus now seems to be on interpolating an entire image.

There are overlapping use cases, but also very different use cases.

04-19-2024 10:13 AM - edited 04-19-2024 10:18 AM

wiebe@CARYA wrote:

It's still not clear to me what the goal is.

The original VI returned a single interpolated pixel.

The focus now seems to be on interpolating an entire image.

There are overlapping use cases, but also very different use cases.

The subVI interpolates exactly one pixel as in the original question, but that would be relatively hard to test because it is so fast and an isolated first call possibly has more overhead.

The outer shell code is just a benchmark harness to see how it performs on numerous pixels, i.e. repeated calls differing in inputs. It also verifies that the result looks approximately correct.

(But yes, to expand an entire picture, there are also other possibilities, of course)

04-19-2024 05:27 PM

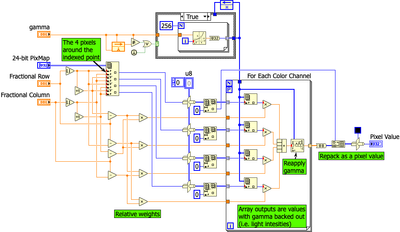

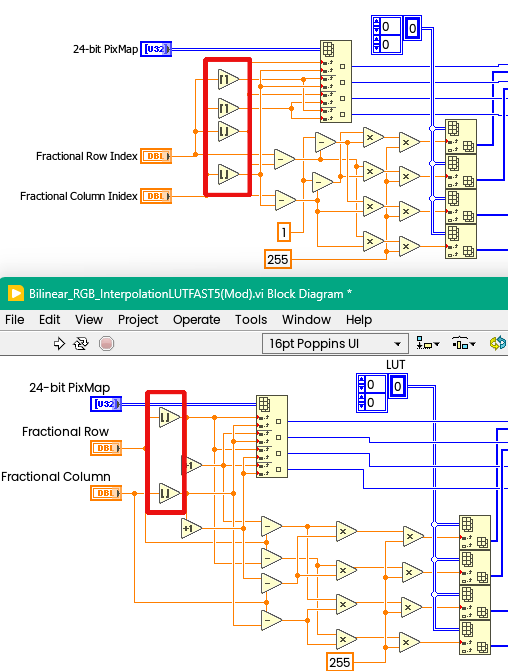

Here's where I currently stand:

I still need to figure out a reasonable way to replace those multiplies with LUTs, but I'm tired and I'll think about it later.

04-21-2024 08:37 AM - edited 04-21-2024 09:04 AM

@paul_a_cardinale wrote:

Here's where I currently stand:

Very well, and thanks for sharing! The good news is that your code now does a bit more computation, so it makes more sense to try porting it to a DLL and check how much the performance can be improved (if at all).

This comment is the second part of the answer to the question raised above: "Why would wrapping code in a DLL make it faster?"

It will be a long "weekend" comment as usual, but please read it thoroughly.

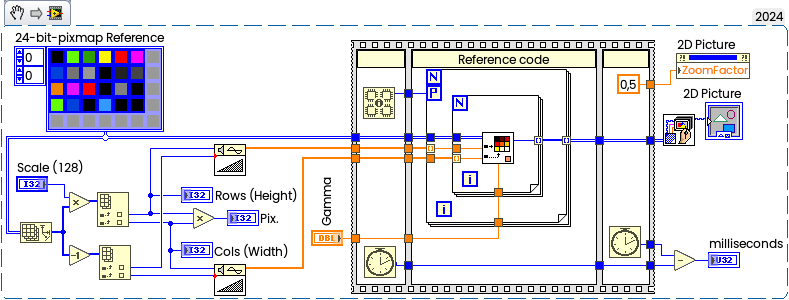

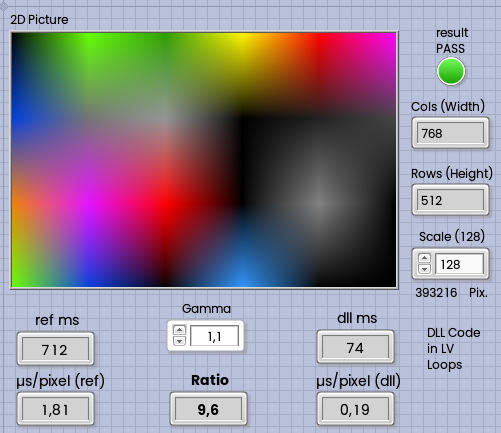

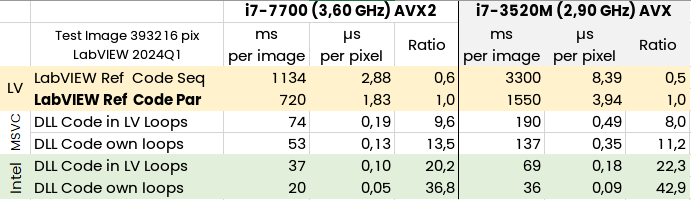

First, let's check where we stand. I will use the following benchmark from one of the previous posts in this thread, slightly modified to produce a destination image of 768x512 pixels, which gives 393216 iterations in the test loops:

On my old i7-7700 it takes around 710-770 ms.

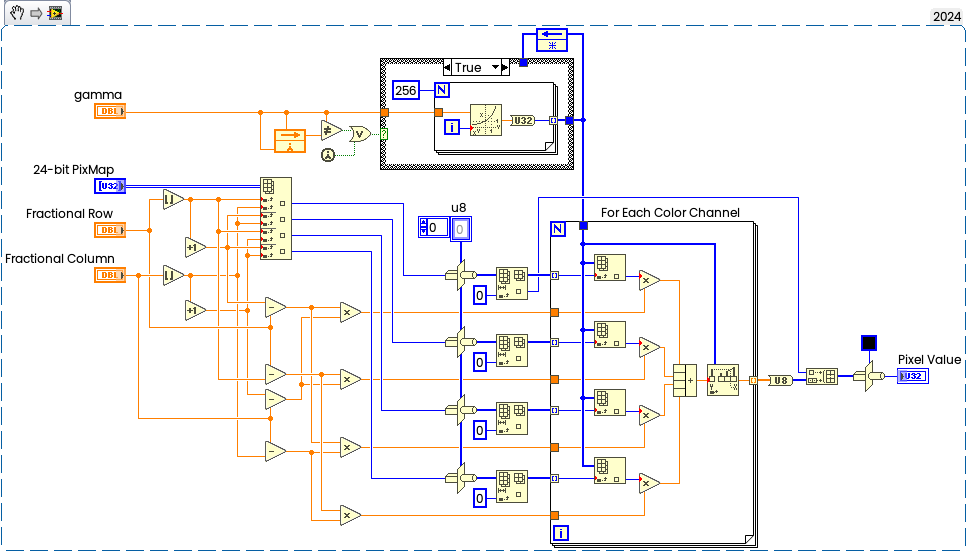

The only change I'll make to the original code is to turn off parallelization on the "RGB loop", because when the outer loop is already parallelized across all CPUs, parallelizing the nested loop as well usually slows execution down a little (you can check this). So this is the code under experiment:

Well, now I will rewrite it in pure C without any significant changes. Here is the code:

IP2D_API UINT fnIP2D(iTDHdl arr, double fCol, double fRow, double gamma)

{

uint32_t* pix = (*arr)->elt; //address to the pixels

int cols = (*arr)->dimSizes[1]; //amount of columns (image width)

// Recompute Gamma if needed:

if (gamma != Gamma) fnLUT(gamma); Gamma = gamma; //Like ShiftReg in LV

//Relative Weights

double relW0 = ((floor(fRow) + 1 - fRow)) * (floor(fCol) + 1 - fCol);

double relW1 = ((fRow)-(floor(fRow))) * (floor(fCol) + 1 - fCol);

double relW2 = ((floor(fRow) + 1 - fRow)) * (fCol - (floor(fCol)));

double relW3 = ((fRow)-(floor(fRow))) * (fCol - (floor(fCol)));

//Four Pixels:

BYTE* p0 = getpXY(pix, cols, rint(floor(fRow)), rint(floor(fCol)));

BYTE* p1 = getpXY(pix, cols, rint(floor(fRow) + 1), rint(floor(fCol)));

BYTE* p2 = getpXY(pix, cols, rint(floor(fRow)), rint(floor(fCol) + 1));

BYTE* p3 = getpXY(pix, cols, rint(floor(fRow) + 1), rint(floor(fCol) + 1));

//Target color:

BYTE bgr[3] = { 0,0,0 };

//#pragma omp parallel for num_threads(3) //<-slow down around twice

for (int i = 0; i < 3; i++) {

double res = 0.0;

double sum = LUT[p0[i]] * relW0 +

LUT[p1[i]] * relW1 +

LUT[p2[i]] * relW2 +

LUT[p3[i]] * relW3;

UINT pos = binarySearch(LUT, sum); //Threshold 1D Array analog

if (pos--) res = pos + (sum - LUT[pos]) / (LUT[pos + 1] - LUT[pos]);

bgr[i] = (int)rint(res); // fractional index

} //RGB loop

return (bgr[0] | bgr[1] << 8 | bgr[2] << 16);

}

This is almost a '1:1' translation. The only small difference is that, as an analog of 'Threshold 1D Array', I'm using a classical binary search, which is slightly faster than walking over 256 elements. The full source is in the attachment. Sorry for the slightly 'messy' code; it was written in the car while waiting for my family, but it works, at least. The most complicated part was getting exactly the same rounding as in LabVIEW, so that the results after floating-point arithmetic are exactly (not approximately) the same in LabVIEW and in the DLL. This is not always possible because, for example, we don't know in which order the additions happen (and floating-point addition is definitely not associative), but I was lucky and haven't found any differences so far (except in cases with extremely high or low gamma values).
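For readers without the attachment, here is a minimal sketch of the idea behind the 'Threshold 1D Array' analog: a binary search for the bracketing entries of an ascending table, followed by linear interpolation to a fractional index. The names `upper_bound` and `fractional_index` are hypothetical and are not the `binarySearch` from the attached source; clamping at both ends of the table is an assumption of this sketch.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: index of the first element strictly greater than y
   in an ascending table (n if there is none). */
static size_t upper_bound(const double *table, size_t n, double y)
{
    size_t lo = 0, hi = n;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (table[mid] <= y) lo = mid + 1;
        else hi = mid;
    }
    return lo;
}

/* Fractional index of y in an ascending table, clamped to [0, n-1]. */
static double fractional_index(const double *table, size_t n, double y)
{
    assert(n >= 2);
    size_t hi = upper_bound(table, n, y);
    if (hi == 0) return 0.0;              /* y lies below the first entry */
    if (hi == n) return (double)(n - 1);  /* y is at or above the last entry */
    size_t lo = hi - 1;
    double step = table[hi] - table[lo];  /* > 0, since table[hi] > y >= table[lo] */
    return (double)lo + (y - table[lo]) / step;
}
```

With the table `{0, 1, 4, 9}`, a value of 2.5 lies halfway between the entries at index 1 and index 2, so the fractional index is 1.5. For a 256-entry table the search needs at most 8 comparisons instead of a linear walk.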

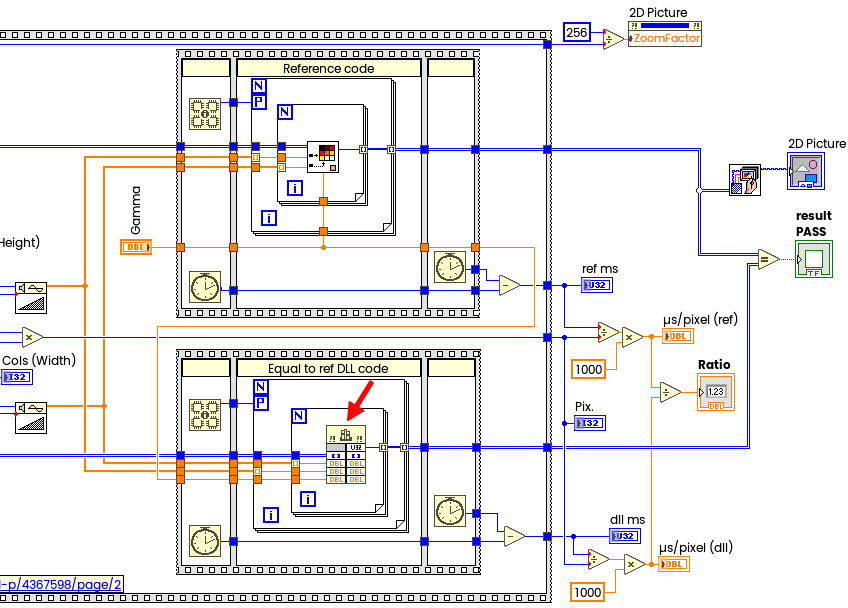

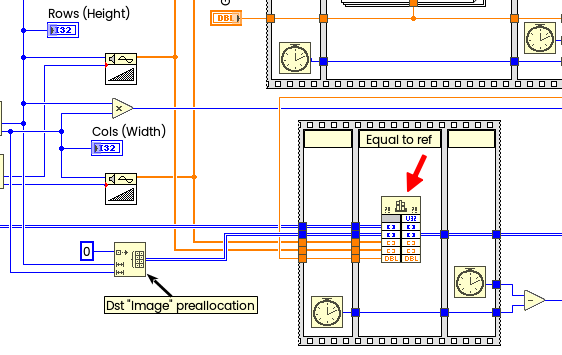

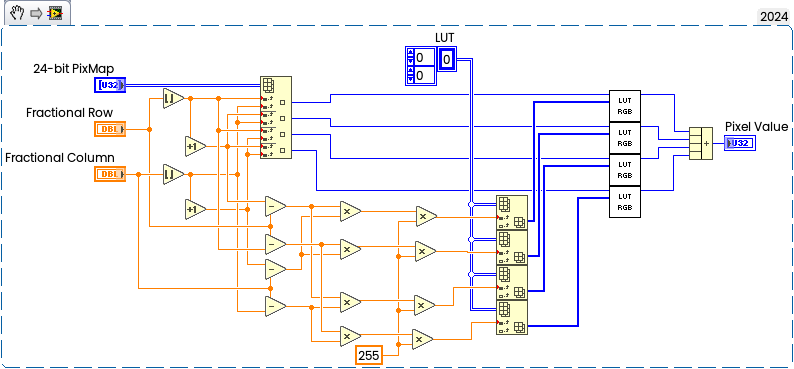

This is how the code is called from LabVIEW in two nested for-loops (the image is passed to the DLL as a native LabVIEW array, Adapt to Type/Handles by Value; the rest is trivial), with the result strictly compared to the original:

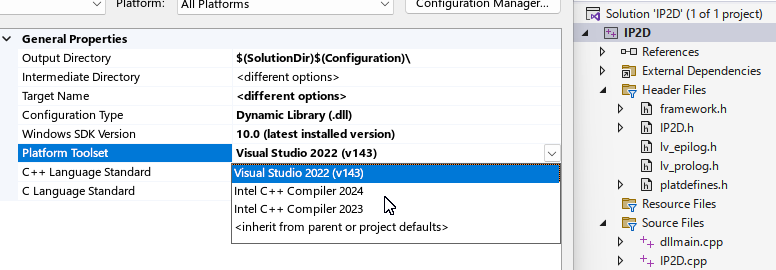

To compile this DLL I'll use Microsoft Visual Studio Professional 2022 v.17.9.6. Not CVI this time, because NI CVI is unfortunately not so good in terms of performance.

Now the result:

Exactly the same result, but around 10 times faster.

Well, what else can we do? Obviously we can move both for-loops into the DLL and then use OpenMP for parallelization (I could call the LabVIEW Memory Manager from inside the DLL, but it is much easier to allocate the array externally):

The source code is simple:

IP2D_API void fnIP2D2(iTDHdl arrSrc, iTDHdl arrDst, fTDHdl fCol, fTDHdl fRow, double gamma)

{

int Ncols = (*fCol)->dimSizes[0];

int Nrows = (*fRow)->dimSizes[0];

double* fColPtr = (*fCol)->elt;

double* fRowPtr = (*fRow)->elt;

unsigned int* DstPtr = (*arrDst)->elt;

#pragma omp parallel for num_threads(4)

for (int x = 0; x < Ncols; x++) {

for (int y = 0; y < Nrows; y++) {

DstPtr[x + y * Ncols] = fnIP2D(arrSrc, fColPtr[x], fRowPtr[y], gamma);

}

}

}

I have fixed the thread count at four because I have a 4-core CPU (feel free to modify if needed).
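If you would rather not hard-code the thread count, one possible approach is to ask the OpenMP runtime how many processors it sees. The sketch below is not part of the attached DLL: `pick_thread_count` and `fill_demo` are hypothetical names, and the loop body is a stand-in for the per-pixel call. It also iterates over rows in the outer loop so that each thread writes contiguous memory, which is a design choice of this sketch, not something benchmarked in this thread.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: number of threads to request for the pixel loop.
   A request of 0 (or more than available) means "use all processors". */
static int pick_thread_count(int requested)
{
    int available = 1;
#ifdef _OPENMP
    available = omp_get_num_procs();
#endif
    if (requested <= 0 || requested > available) return available;
    return requested;
}

/* Stand-in for the interpolation loop: dst[y * ncols + x] = x + y.
   Runs in parallel only when the file is compiled with OpenMP enabled. */
static void fill_demo(unsigned int *dst, int ncols, int nrows, int threads)
{
    assert(dst && ncols > 0 && nrows > 0);
    int n = pick_thread_count(threads);
    (void)n; /* silences "unused" warnings when OpenMP is disabled */
#pragma omp parallel for num_threads(n)
    for (int y = 0; y < nrows; y++)
        for (int x = 0; x < ncols; x++)
            dst[y * ncols + x] = (unsigned int)(x + y);
}
```

Without an OpenMP compiler switch the pragma is ignored and the loop simply runs on one thread, so the same source builds either way.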

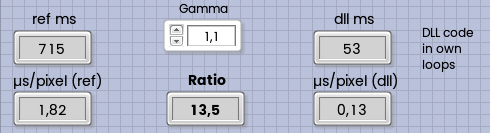

Now it's more than 13 times faster than the original LabVIEW code:

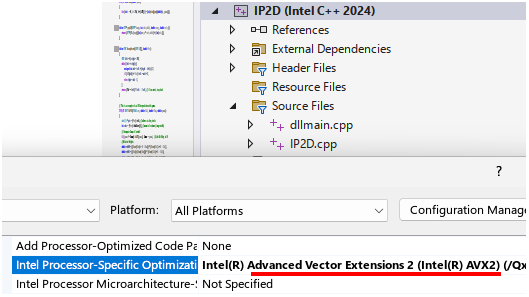

Not so bad, but there is still some room for performance tuning. Theoretically, we can check the assembler listing and probably find that some operations can be vectorized with AVX, then analyze and rewrite the "bottlenecks" with AVX/AVX2 or maybe FMA. That would be hard work; the easiest way is to take the Intel oneAPI C++ Compiler and just recompile this code. So, I will simply switch from Visual Studio 2022 to Intel C++ 2024 (which is preinstalled on my PC, of course):

In addition, I'll turn on AVX2 optimization in the project's properties:

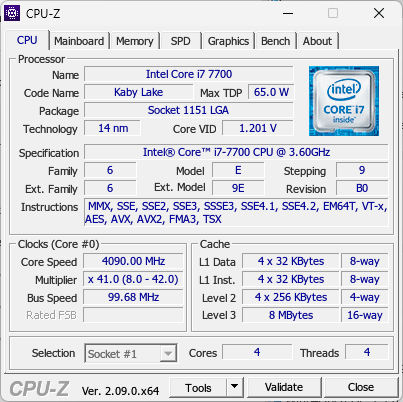

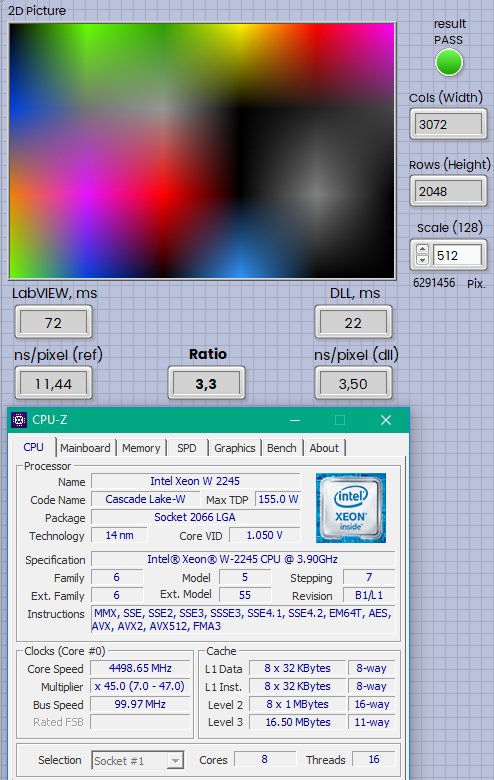

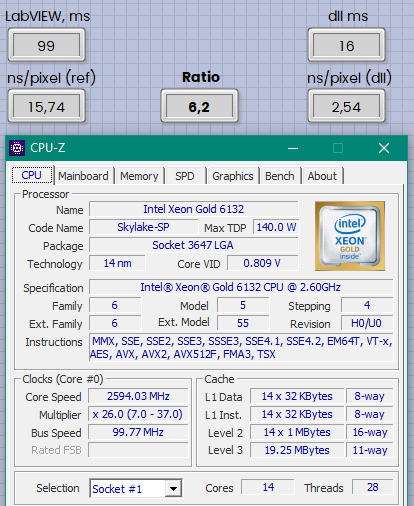

You can always check which instruction sets are supported with CPU-Z or similar software. This is my CPU:

And now, after recompilation with Intel + AVX2 optimization, repeat the benchmark.

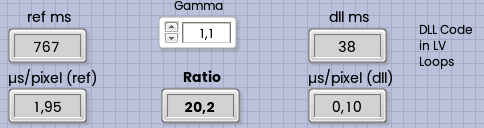

Version "With LabVIEW loops":

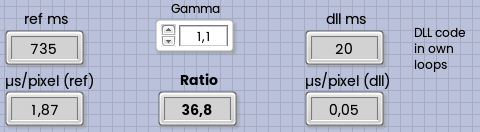

Version "without LabVIEW loops":

So the execution time improved from about 750 milliseconds to only around 20 milliseconds.

I checked this on two PCs at home, and my ancient laptop is the most impressive, with an improvement of over 40x:

If you try it, you might get slightly different results depending on the CPU. Some performance deviation may be present from run to run, but a high-precision benchmark is out of scope. The full source code and benchmarks are in the attachment. The LabVIEW code was tested in LabVIEW 2024 and downsaved to LV2018. The DLLs are available in both 32-bit and 64-bit (the 32-bit version wasn't tested thoroughly, but it should work). The DLLs were compiled in MSVC (you'll need the latest Microsoft Visual C++ Redistributable, usually already installed on a Windows PC), and the Intel DLLs are also included, both for AVX and AVX2. There was an option to build a 'universal' DLL, but I forgot. If you trust me and would like to run this code with the Intel DLLs compiled by me, then copy both from the Release-Intel folder to the root folder and install the latest Intel oneAPI DPC++/C++ Compiler Runtime for Windows, or recompile, of course. Unfortunately, I don't have much time to 'polish' everything, but the overall idea is more or less clear (I hope): in some cases, you can really get faster execution by wrapping the code into a DLL. Minor bugs could exist above, so use it at your own risk.

Andrey.

04-21-2024 12:07 PM

Wow, you put a lot of work into this and I'll have a more detailed look later.

Here are a few initial comments:

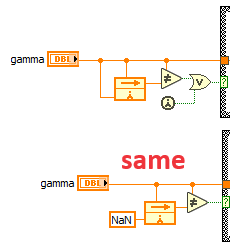

If you init the FN with NaN, you could eliminate the "first call?" primitive and the OR. (Since gamma is never zero, we might even just leave the initializer disconnected.)
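The same trick carries over to the C side. A minimal sketch, assuming a cached table that is rebuilt only when gamma changes: because NaN compares unequal to every value, initializing the cache with NaN makes the first call rebuild without a separate "first call" flag. All names here are hypothetical, and the table contents are a placeholder (a real gamma table would use `pow`).

```c
#include <assert.h>
#include <math.h>

static double cached_gamma = NAN; /* NaN != x is true for every x */
static double lut[256];
static int rebuild_count = 0;     /* only here to make the behaviour observable */

/* Rebuild the table only when gamma differs from the cached value.
   Like the static table in the DLL, this is not thread-safe. */
static const double *get_lut(double gamma)
{
    if (gamma != cached_gamma) {
        for (int i = 0; i < 256; i++)
            lut[i] = gamma * i;   /* placeholder for the real gamma curve */
        cached_gamma = gamma;
        rebuild_count++;
    }
    return lut;
}
```

Calling `get_lut(2.2)` twice rebuilds the table once; a following `get_lut(1.0)` rebuilds it a second time.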

If gamma=1, your code is off by up to 2 compared to the original (and my non-gamma) code. I think you need to tweak to match.

I still don't understand the song and dance with the gamma. Without it, my code is 10x faster than your dll. I thought speed was most important. If gamma is close to 1, the results look the same. And if gamma is significantly different, the result looks very ugly.

04-21-2024 02:54 PM - edited 04-21-2024 03:15 PM

@altenbach wrote:

Wow, you put a lot of work into this and I'll have a more detailed look later.

Thank you, Christian, nothing special here, I just recreated LabVIEW code with pure C, nothing more.

@altenbach wrote:

If you init the FN with NaN, you could eliminate the "first call?" primitive and the OR. (Since gamma is never zero, we might even just leave the initializer disconnected.)

Yes, you're right, but this was the author's original code. I just left it "as is"; only performance was in scope.

@altenbach wrote:

If gamma=1, your code is off by up to 2 compared to the original (and my non-gamma) code. I think you need to tweak to match.

I still don't understand the song and dance with the gamma. Without it, my code is 10x faster than your dll. I thought speed was most important.

Fully agree with you. With gamma it makes more sense to port to a DLL, but without gamma we can try as well.

Let's try to do it — I will remove gamma completely and rewrite the DLL as shown below:

// This is an example of a 2D interpolation without gamma.

IP2D_API UINT fnIP2D(iTDHdl arr, double fCol, double fRow)

{

uint32_t* pix = (*arr)->elt; //address to the pixels

int cols = (*arr)->dimSizes[1]; //amount of columns (image width)

//Relative Weights to indexes

UINT i0 = rint(((floor(fRow) + 1 - fRow)) * (floor(fCol) + 1 - fCol) * 255.0);

UINT i1 = rint(((fRow)-(floor(fRow))) * (floor(fCol) + 1 - fCol) * 255.0);

UINT i2 = rint(((floor(fRow) + 1 - fRow)) * (fCol - (floor(fCol))) * 255.0);

UINT i3 = rint(((fRow)-(floor(fRow))) * (fCol - (floor(fCol))) * 255.0);

//Four Pixels:

UINT p0 = getpXY(pix, cols, rint(floor(fRow)), rint(floor(fCol)));

UINT p1 = getpXY(pix, cols, rint(floor(fRow) + 1), rint(floor(fCol)));

UINT p2 = getpXY(pix, cols, rint(floor(fRow)), rint(floor(fCol) + 1));

UINT p3 = getpXY(pix, cols, rint(floor(fRow) + 1), rint(floor(fCol) + 1));

p0 = LUT_RGB(p0, &(LUT[i0 << 8]));

p1 = LUT_RGB(p1, &(LUT[i1 << 8]));

p2 = LUT_RGB(p2, &(LUT[i2 << 8]));

p3 = LUT_RGB(p3, &(LUT[i3 << 8]));

return (p0 + p1 + p2 + p3);

}

This code matches your code, which I recreated like this (hopefully correctly):

Complete Code:

// Header

#ifdef IP2D_EXPORTS

#define IP2D_API extern "C" __declspec(dllexport)

#else

#define IP2D_API __declspec(dllimport)

#endif

// lv_prolog.h and lv_epilog.h set up the correct alignment for LabVIEW data.

#include "lv_prolog.h"

// Typedefs

typedef struct {

int32_t dimSizes[1];

double elt[1];

} fTD;

typedef fTD** fTDHdl;

typedef struct {

int32_t dimSizes[2];

uint32_t elt[1];

} iTD;

typedef iTD** iTDHdl;

#include "lv_epilog.h"

IP2D_API unsigned int fnIP2D(iTDHdl arr, double fCol, double fRow);

IP2D_API void fnIP2D2(iTDHdl arrSrc, iTDHdl arrDst, fTDHdl fCol, fTDHdl fRow);

IP2D_API void fnLoadLUT(BYTE* ptr, size_t size);

// Source:

#include "framework.h"

#include "IP2D.h"

static BYTE LUT[256*256]; // Not thread-safe

IP2D_API void fnLoadLUT(BYTE* ptr, size_t size)

{

memcpy(LUT, ptr, size * sizeof(BYTE));

}

inline UINT getpXY(UINT* image, int cols_width, double row_y, double col_x) {

return (image[((int)row_y * cols_width) + (int)col_x]);

}

inline UINT LUT_RGB(UINT ColorIn, BYTE* lutRow) {

BYTE b1 = lutRow[ColorIn & 0xFF];

BYTE b2 = lutRow[(ColorIn & 0xFF00) >> 8];

BYTE b3 = lutRow[(ColorIn & 0xFF0000) >> 16];

return (b1 | b2 << 8 | b3 << 16);

}

// This is an example of a 2D interpolation without gamma.

IP2D_API UINT fnIP2D(iTDHdl arr, double fCol, double fRow)

{

uint32_t* pix = (*arr)->elt; //address to the pixels

int cols = (*arr)->dimSizes[1]; //amount of columns (image width)

//Relative Weights to indexes

UINT i0 = rint(((floor(fRow) + 1 - fRow)) * (floor(fCol) + 1 - fCol) * 255.0);

UINT i1 = rint(((fRow)-(floor(fRow))) * (floor(fCol) + 1 - fCol) * 255.0);

UINT i2 = rint(((floor(fRow) + 1 - fRow)) * (fCol - (floor(fCol))) * 255.0);

UINT i3 = rint(((fRow)-(floor(fRow))) * (fCol - (floor(fCol))) * 255.0);

//Four Pixels:

UINT p0 = getpXY(pix, cols, rint(floor(fRow)), rint(floor(fCol)));

UINT p1 = getpXY(pix, cols, rint(floor(fRow) + 1), rint(floor(fCol)));

UINT p2 = getpXY(pix, cols, rint(floor(fRow)), rint(floor(fCol) + 1));

UINT p3 = getpXY(pix, cols, rint(floor(fRow) + 1), rint(floor(fCol) + 1));

p0 = LUT_RGB(p0, &(LUT[i0 << 8]));

p1 = LUT_RGB(p1, &(LUT[i1 << 8]));

p2 = LUT_RGB(p2, &(LUT[i2 << 8]));

p3 = LUT_RGB(p3, &(LUT[i3 << 8]));

return (p0 + p1 + p2 + p3);

}

// same as above with Rows/Cols cycles

IP2D_API void fnIP2D2(iTDHdl arrSrc, iTDHdl arrDst, fTDHdl fCol, fTDHdl fRow)

{

int Ncols = (*fCol)->dimSizes[0];

int Nrows = (*fRow)->dimSizes[0];

double* fColPtr = (*fCol)->elt;

double* fRowPtr = (*fRow)->elt;

unsigned int* DstPtr = (*arrDst)->elt;

#pragma omp parallel for num_threads(4)

for (int x = 0; x < Ncols; x++) {

for (int y = 0; y < Nrows; y++) {

DstPtr[x + y * Ncols] = fnIP2D(arrSrc, fColPtr[x], fRowPtr[y]);

}

}

}
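The listing does not show how the 256x256 table passed to `fnLoadLUT` is filled, so here is a sketch under an assumed layout: row = quantized weight (0..255), column = channel value, entry = round(weight * value / 255). The names `weight_lut`, `build_weight_lut`, `scale_pixel` and `add_saturate` are hypothetical. One thing to be aware of with quantized weights: at the exact centre of four pixels each weight is rint(0.25 * 255) = 64, so the four weights sum to 256 rather than 255, and a plain sum of packed pixels can then carry from one channel into the next. A per-channel saturating add is one defensive option.

```c
#include <assert.h>
#include <stdint.h>

static uint8_t weight_lut[256 * 256]; /* row = weight 0..255, column = channel value */

/* Assumed layout: weight_lut[w * 256 + v] = round(w * v / 255). */
static void build_weight_lut(void)
{
    for (unsigned w = 0; w < 256; w++)
        for (unsigned v = 0; v < 256; v++)
            weight_lut[(w << 8) | v] = (uint8_t)((w * v + 127u) / 255u);
}

/* Scale the three colour channels of a packed pixel by weight w (0..255). */
static uint32_t scale_pixel(uint32_t px, unsigned w)
{
    assert(w < 256);
    const uint8_t *row = &weight_lut[w << 8];
    return (uint32_t)row[px & 0xFF]
         | (uint32_t)row[(px >> 8) & 0xFF] << 8
         | (uint32_t)row[(px >> 16) & 0xFF] << 16;
}

/* Add two packed pixels channel by channel, clamping each channel at 255. */
static uint32_t add_saturate(uint32_t a, uint32_t b)
{
    uint32_t out = 0;
    for (int shift = 0; shift < 24; shift += 8) {
        uint32_t s = ((a >> shift) & 0xFF) + ((b >> shift) & 0xFF);
        out |= (s > 255 ? 255u : s) << shift;
    }
    return out;
}
```

Under this assumed table, four white pixels (0xFFFFFF) each scaled by weight 64 give 0x404040; adding them with plain `+` yields 0x1010100, while the saturating add yields 0xFFFFFF. Whether the extra work is worth it depends on how the real table and weights are rounded.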

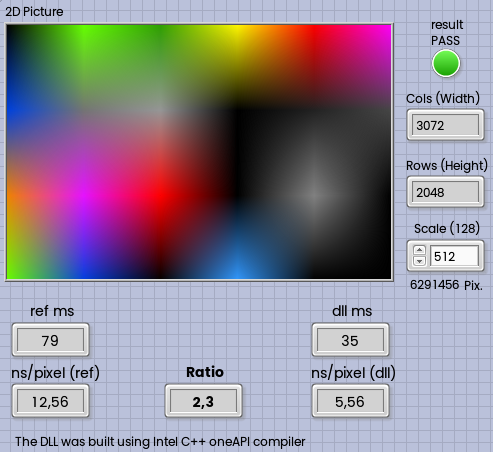

Now I compiled this DLL with the Intel compiler, with and without AVX2 support.

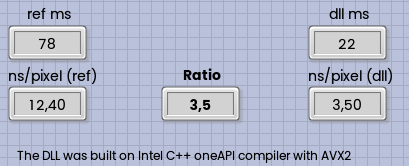

Without AVX2, the DLL-based version is roughly twice as fast as LabVIEW:

(I increased the size to 3072x2048 to have 6M iterations)

With the AVX2 flag enabled it runs more than three times faster; the execution time dropped from 78 to 22 milliseconds:

There is no "silver bullet", but in some particular cases we can improve performance by better CPU utilization. However, this usually makes sense for a large amount of computation. With a very small amount, we can easily reach the memory's bandwidth limit, and then there will be no difference.

In the past, I played with AVX512, which is also a very specific instruction set - here we have 512-bit registers (so I can perform computation on 32 16-bit grayscale pixels in one single command). But under high load, the CPU runs hot (just because too many transistors are switching at the same time), then goes into throttling, and then there is not much difference in comparison to AVX2.

One good point is that modern C compilers are smart enough to perform automatic vectorization, loop unrolling, etc., so not as much "hand work" is required as before (like 25 years ago, when we were able to get better performance just by swapping assembly instructions for better pipeline utilization or by performing some memory prefetching). And optimization should be meaningful: there are usually just a few "bottleneck" places, and it's not necessary to optimize everything. Very often, after aggressive optimization, the code is not as "readable" as it was before.

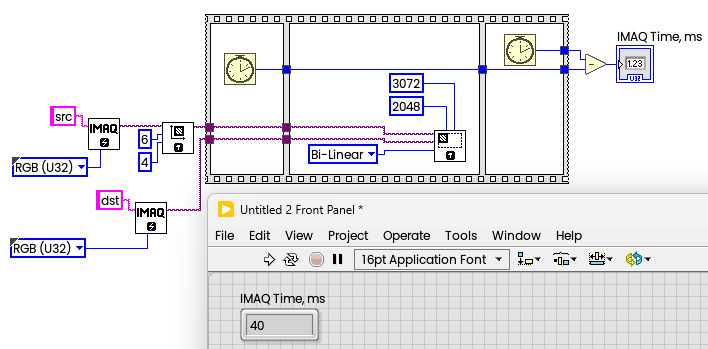

By the way, I just checked how much time IMAQ Vision needs to resize a 6x4 RGB image to 3072x2048 using bilinear interpolation; it is 40 ms:

And 80 ms with pure LabVIEW code is really not so bad. LabVIEW is amazing!

Andrey.

PS

Please note — to open and run the LabVIEW code attached to this message the Intel oneAPI DPC++/C++ Compiler Runtime for Windows needs to be downloaded and installed. Direct link to version 2024.1.0.

04-21-2024 05:52 PM

For reference, here's my code. Have not tested on your newest version. I am sure it could be tweaked further...

04-21-2024 11:55 PM - edited 04-22-2024 12:19 AM

@altenbach wrote:

For reference, here's my code. Have not tested on your newest version. I am sure it could be tweaked further...

Thank you! It's the same, but a little bit slower, probably because you have four roundings:

But the difference is not significant, maybe a few percent: 229 ms vs 242 ms on my laptop (benchmark in the attachment):

With this in mind I also changed the DLL to reduce the amount of repeated computation (the compiler can optimize a lot, but not everything):

// This is an example of a 2D interpolation without gamma.

IP2D_API UINT fnIP2D(iTDHdl arr, double fCol, double fRow)

{

uint32_t* pix = (*arr)->elt; //address to the pixels

int cols = (*arr)->dimSizes[1]; //amount of columns (image width)

//Relative Weights to indexes

double ffRow = floor(fRow);

double ffCol = floor(fCol);

UINT i0 = rint((ffRow + 1 - fRow) * (ffCol + 1 - fCol) * 255.0);

UINT i1 = rint((fRow-ffRow) * (ffCol + 1 - fCol) * 255.0);

UINT i2 = rint((ffRow + 1 - fRow) * (fCol - ffCol) * 255.0);

UINT i3 = rint((fRow-ffRow) * (fCol - ffCol) * 255.0);

//Four Pixels:

int iRow = rint(ffRow);

int iCol = rint(ffCol);

UINT p0 = getpXY(pix, cols, iRow, iCol);

UINT p1 = getpXY(pix, cols, iRow + 1, iCol);

UINT p2 = getpXY(pix, cols, iRow, iCol + 1);

UINT p3 = getpXY(pix, cols, iRow + 1, iCol + 1);

p0 = LUT_RGB(p0, &(LUT[i0 << 8]));

p1 = LUT_RGB(p1, &(LUT[i1 << 8]));

p2 = LUT_RGB(p2, &(LUT[i2 << 8]));

p3 = LUT_RGB(p3, &(LUT[i3 << 8]));

return (p0 + p1 + p2 + p3);

}

Now it's a little bit faster on my laptop (i7-3520M):

and on the desktop (i7-7700):

The changed code is in the attachment as well.

04-22-2024 01:10 AM

(posting by phone, cannot look at code)

I don't know if an increment or rounding is faster, but overall we are pretty close to the max speed, I think.

It probably does not make a difference here, but the actual four indices used might differ if we are exactly on an integer.
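To make the integer-coordinate case concrete, here is a small sketch comparing two ways of choosing the upper neighbour: always "lower + 1" (the increment form, as in the `iRow + 1` / `iCol + 1` code above) versus rounding the coordinate up. The function names are hypothetical, and the sketch assumes non-negative coordinates so that truncation equals `floor`.

```c
#include <assert.h>

/* Lower neighbour index for a non-negative coordinate (truncation == floor here). */
static int lower_index(double x)
{
    assert(x >= 0.0);
    return (int)x;
}

/* Variant A: the upper neighbour is always lower + 1. */
static int upper_by_increment(double x)
{
    return lower_index(x) + 1;
}

/* Variant B: the upper neighbour is the coordinate rounded up (ceil for x >= 0). */
static int upper_by_round_up(double x)
{
    int lo = lower_index(x);
    return lo + ((double)lo < x ? 1 : 0);
}

/* Weight of the upper neighbour, i.e. the fractional part of the coordinate. */
static double upper_weight(double x)
{
    return x - (double)lower_index(x);
}
```

For x = 2.25 both variants pick index 3. For x = 2.0 variant A picks 3 and variant B picks 2, but the upper weight is 0, so the interpolated value is unaffected. The difference only matters at the border: with the increment form, a coordinate exactly on the last row or column reads one element past the image unless the caller keeps coordinates strictly inside it.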

04-22-2024 01:30 AM

@altenbach wrote:

(posting by phone, cannot look at code)

I don't know if an increment or rounding is faster, but overall we are pretty close to the max speed, I think.

It probably does not make a difference here, but the actual four indices used might differ if we are exactly on an integer.

Yes, definitely. Now I tested this (the code from the previous comment) on two relatively powerful Xeon PCs:

Here on the W2245 the DLL is faster by a factor of 3:

And on the Gold 6132 by a factor of 6: