Is LabVIEW a Time Machine?

Solved! 04-11-2022 03:36 PM

@altenbach wrote:

(I still suspect a coding error even if you don't believe it :D)

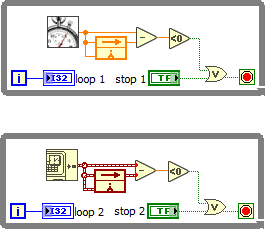

Properly coded, I have yet to ever see any negative dt. For example the following two code examples will loop "forever". Agreed?

04-11-2022 04:27 PM

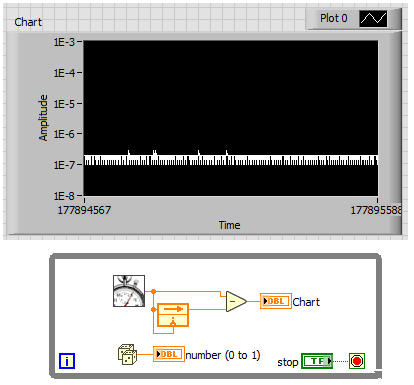

Thank you 'altenbach' and ALL others. The get time and date stamp has a large integer portion, counting elapsed seconds since Jan 1, 1904 (I seem to recall) for LabVIEW. I think it also runs on the PC "clock", not a processor speed linked tick count? So, get time and date stamp loses resolution even as a DBL cluster? LabVIEW is NOT a time machine but can look like one if you are not careful. I've re-coded to use that High Resolution Relative Seconds VI thing and seem to have no time errors, yet. I think that vi uses tick count and allows for tick count 'wrapping' if tick count exceeds... Block diagram 'pass worded' but clear what it does. For tick count:

"The base reference time (millisecond zero) is undefined. That is, you cannot convert millisecond timer value to a real-world time or date. Be careful when you use this function in comparisons because the value of the millisecond timer wraps from (2^32)–1 to 0"

We cannot stop the code on a time error, as some runs last multiple weeks at a low logging frequency. We are testing short- and long-term performance of trace toxic and flammable gas sensors, then calibrating them after a week-long run at multiple ppb concentrations, T, %RH and P... Our PhD Physicist, and a GREAT Friend of mine, has non-linear models to fit that data and generate 4-dimensional calibration coefficient equations for later EPROM write... I need to make this dance for him, or her.

Using "High Resolution Relative Seconds VI" and NOT logging timestamp seems to work. Testing overnight. Now, I use get time and date stamp only to generate new data file names to prevent confusion and make them unique. Yeah, also add the linked COM Port to auto generated file names...

Thank you all for your tips. After an overnight run I'll post results.

Warm Regards All,

Saturn 233207

AKA Lloyd Ploense, Sr ChE, Sr Automation Engineer etc...

04-11-2022 04:30 PM

Yes, I think Windows made the .NET call for DateTime monotonic now, with this nice note:

The resolution of this property depends on the system timer, which depends on the underlying operating system. It tends to be between 0.5 and 15 milliseconds. As a result, repeated calls to the Now property in a short time interval, such as in a loop, may return the same value.

https://docs.microsoft.com/en-us/dotnet/api/system.datetime.now?view=net-6.0

Have a pleasant day and be sure to learn Python for success and prosperity.

04-11-2022 06:03 PM

The LabVIEW Timestamp is a 128-bit fixed-point value with a 64-bit signed integer part and a 64-bit fractional part. However, only the most significant 32 bits of the fractional part are used, as that is already enough to represent a resolution of less than half a nanosecond.

Its value is determined from a call to a Windows API function returning a QUAD integer (64 bit), which counts 100 ns intervals since January 1, 1601, UTC. This is the absolute time value that is subject to skew from time synchronization adjustment.
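To make the two epochs and the fixed-point layout concrete, here is a minimal Python sketch. It is not LabVIEW's actual conversion code; the function names are made up for illustration, and it assumes the Windows value is a FILETIME-style count (Windows documents that epoch as January 1, 1601, UTC) and that only 32 fractional bits are kept, as described above.

```python
import math
from datetime import datetime, timezone

# Epochs: Windows FILETIME counts from 1601-01-01 UTC,
# LabVIEW timestamps count from 1904-01-01 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)
EPOCH_OFFSET_S = int((LABVIEW_EPOCH - FILETIME_EPOCH).total_seconds())
TICKS_PER_SECOND = 10_000_000  # one FILETIME tick is 100 ns


def filetime_to_labview(ticks):
    """Hypothetical helper: FILETIME ticks -> (seconds since 1904, 32-bit fraction)."""
    seconds, remainder = divmod(ticks, TICKS_PER_SECOND)
    fraction = (remainder << 32) // TICKS_PER_SECOND  # units of 2**-32 s
    return seconds - EPOCH_OFFSET_S, fraction


def labview_to_float(seconds, fraction):
    """Collapse the fixed-point pair into a double, roughly what a DBL conversion does."""
    return seconds + fraction / 2**32


def double_step_near(value):
    """Spacing between adjacent doubles near `value` (needs Python 3.9+)."""
    return math.ulp(value)
```

For scale: the fixed-point step is `2**-32` s (about 0.23 ns), while `double_step_near(3.7e9)` is `2**-21` s (about 0.48 µs) for a timestamp around 2022. So a DBL timestamp is coarser than the native format but still far finer than the system clock's update interval.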

The ms tick counter is free running and never adjusted, but it will roll over after 2^32 ms (about 49.7 days). The HR timer is also free running and never adjusted, and it will also roll over at some point, but it typically has a much longer period because it uses a 64-bit integer, even though it also has a much higher resolution.

Note that on most modern CPUs the HR timers are directly based on CPU TSC counters and are very efficient, but some hardware has to fall back on other counters that are much more costly to query.
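The rollover matters for runs of several weeks. As a rough Python illustration (not LabVIEW code; the names are hypothetical), a difference taken modulo 2^32 stays correct across a single wrap, while a plain subtraction goes hugely negative:

```python
TICK_MODULUS = 2**32  # the millisecond timer wraps from 2**32 - 1 back to 0
ROLLOVER_DAYS = TICK_MODULUS / 1000 / 86400  # wrap period in days


def naive_elapsed_ms(start_tick, end_tick):
    """Plain subtraction: goes negative when the counter wrapped in between."""
    return end_tick - start_tick


def elapsed_ms(start_tick, end_tick):
    """Wrap-safe difference of two 32-bit tick readings.

    Correct as long as less than one full wrap period passed between readings.
    """
    return (end_tick - start_tick) % TICK_MODULUS
```

With a start reading of `2**32 - 5` and an end reading of `10`, `elapsed_ms` gives 15 ms while the naive version gives a large negative number. In LabVIEW, subtracting two U32 tick values wraps the same way as long as the result stays unsigned.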

04-11-2022 06:17 PM

@Saturn233207 wrote:

I've re-coded to use that High Resolution Relative Seconds VI thing and seem to have no time errors, yet. I think that vi uses tick count and allows for tick count 'wrapping' if tick count exceeds... Block diagram 'pass worded' but clear what it does.

No, tick count has only integer ms resolution while with the "high resolution relative seconds", you can time well below microseconds easily. Many orders of magnitude better!

04-12-2022 01:57 AM - edited 04-12-2022 02:04 AM

@Saturn233207 wrote:

I've re-coded to use that High Resolution Relative Seconds VI thing and seem to have no time errors, yet. I think that vi uses tick count and allows for tick count 'wrapping' if tick count exceeds...

It uses the QueryPerformanceCounter() API (QPC) to do its magic and is similar to the tick count, but has a much higher resolution. That resolution, however, depends on the CPU (and if the Windows kernel decides that the CPU TSC resource is not suitable, it uses other sources such as the ACPI timer to do its work). It is in principle simply a counter with an arbitrary interval that can, and almost certainly will, differ between computers unless they are really exactly the same hardware. Other functions from the QueryPerformanceCounter() API group let you retrieve information about that interval, so that the difference between two readings can be scaled to a real-world time scale such as nanoseconds or microseconds, or to a frequency.

Not sure why the VIs are password protected. Usually NI does that if they call into LabVIEW exported functions rather than directly into the Windows API, so it may be that the actual logic for the proper QPC handling is present in the LabVIEW runtime. That would make sense, since they probably also use some of that logic for the timing of the Timed Loop to get it a bit more accurate than only several milliseconds.
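The scaling of a counter difference by the counter frequency can be sketched in Python. This only illustrates the idea and is not what the LabVIEW VI does internally. `ticks_to_seconds` and `time_call` are hypothetical names, and as far as I know Python's `time.perf_counter_ns()` is itself built on QueryPerformanceCounter() on Windows and is already scaled to nanoseconds.

```python
import time


def ticks_to_seconds(start_ticks, end_ticks, ticks_per_second):
    """Scale a raw counter difference to seconds using the counter frequency."""
    return (end_ticks - start_ticks) / ticks_per_second


def time_call(func, *args):
    """Time one call with the performance counter; returns (result, seconds)."""
    start_ns = time.perf_counter_ns()
    result = func(*args)
    end_ns = time.perf_counter_ns()
    # perf_counter_ns reports nanoseconds, so the effective frequency is 1e9.
    return result, ticks_to_seconds(start_ns, end_ns, 1_000_000_000)


# The clock reports itself as monotonic, so differences are never negative.
PERF_COUNTER_IS_MONOTONIC = time.get_clock_info("perf_counter").monotonic
```

For example, with an assumed counter frequency of 10 MHz, `ticks_to_seconds(0, 10_000_000, 10_000_000)` is exactly one second. The frequency must be queried on the machine that produced the readings, since it can differ between computers.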

But that doesn't mean that the Timed Loop can guarantee ms intervals. It is running on Windows, which is not a real-time system, so it is very possible that a process does not get any CPU time for many milliseconds, sometimes even seconds. If LabVIEW doesn't get CPU time assigned by the OS, it can't do anything about that.

04-12-2022 07:46 AM

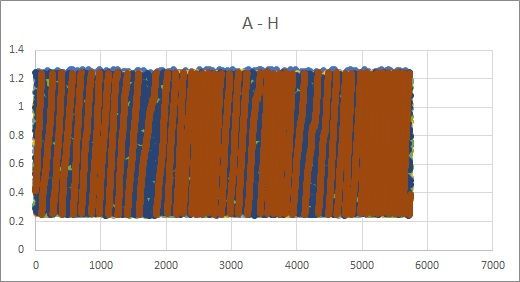

We don't really need a real-time system, since we are on RS-232 serial at 9,600 baud. After re-coding with "High Resolution Relative Seconds VI" I see ZERO time aberrations across 5,737 readings...

However, this means we cannot use time stamps in our files unless perhaps we increment an initial one using "High Resolution Relative Seconds VI" delta t. Elapsed time is good enough for now I think.
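The "increment an initial timestamp by the high-resolution delta t" idea could look roughly like this in Python. It is a sketch under assumptions, not the logging code from this thread: `SteadyTimestamper` is a made-up name, and `time.perf_counter` stands in for the High Resolution Relative Seconds VI.

```python
import time
from datetime import datetime, timedelta, timezone


class SteadyTimestamper:
    """Timestamps built from one wall-clock reading plus monotonic elapsed time."""

    def __init__(self, clock=time.perf_counter, now=None):
        self._clock = clock          # monotonic seconds source
        self._t0 = clock()           # counter reading at start
        # Read the wall clock once only; later stamps never consult it again.
        self._base = now if now is not None else datetime.now(timezone.utc)

    def elapsed(self):
        """Seconds since construction, from the monotonic counter."""
        return self._clock() - self._t0

    def stamp(self):
        """Initial wall-clock time advanced by the monotonic elapsed time."""
        return self._base + timedelta(seconds=self.elapsed())
```

Each logged row would call `stamp()` once. The stamps can never step backwards, but over a multi-week run they will slowly drift from the PC clock whenever that clock is resynchronized, so they are best treated as "start time plus elapsed" rather than true wall time.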

Thank You All Again!

Warm Regards, Saturn233207

04-12-2022 08:46 AM

We still don't know the units of your Y axis. Seconds? Milliseconds? Microseconds?

What does the graph represent?

04-13-2022 11:56 AM

The units of the Y axis are seconds.
