Setting up a call library node for a DLL

Solved! 11-28-2019 10:51 AM - edited 11-28-2019 10:52 AM

Where would you put the Typecast? I have no idea how that would solve the problem in any way. If you mean typecasting the incoming cluster, then definitely not! Aside from endianness trouble (all values wider than 8 bits will be converted to big endian, which is definitely the wrong byte order for your x86/x64-compiled DLL), there will also be a 32-bit integer prepended to the inlined string, which indicates the length of the string. And the resulting byte stream will not be 4 + 4 + 4 + 4 + 8 + 256 bytes long but instead 4 + 4 + 4 + 4 + 8 + 4 + n bytes, with n being the length of the incoming string, which could be 0.
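To make the size difference concrete, here is a small C sketch. It assumes a hypothetical struct `Params` with four 32-bit integers, a double as the 8-byte field, and an inline `char name[256]`. `typecast_like_flatten` is a hypothetical helper that only mimics what Typecast does to the matching cluster (big-endian scalars, a 32-bit length prefix, then only the actual string bytes); it is not LabVIEW's implementation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical native layout the DLL expects: 4+4+4+4+8+256 bytes. */
typedef struct {
    int32_t a, b, c, d;
    double  value;
    char    name[256];
} Params;

static_assert(sizeof(Params) == 280, "native struct is 280 bytes");

/* Write the low `width` bytes of v in big-endian order. */
static void put_be(uint8_t *out, uint64_t v, size_t width) {
    for (size_t i = 0; i < width; i++)
        out[i] = (uint8_t)(v >> (8 * (width - 1 - i)));
}

/* Mimics a Typecast of the cluster: big-endian scalars, a 32-bit length
   prefix, then only the n string bytes. Returns the bytes written. */
size_t typecast_like_flatten(const Params *p, uint8_t *out) {
    const int32_t ints[4] = { p->a, p->b, p->c, p->d };
    size_t pos = 0;
    for (int i = 0; i < 4; i++) {
        put_be(out + pos, (uint32_t)ints[i], 4);
        pos += 4;
    }
    uint64_t bits;
    memcpy(&bits, &p->value, sizeof bits);   /* raw IEEE 754 bits of the double */
    put_be(out + pos, bits, 8);
    pos += 8;
    const char *end = memchr(p->name, '\0', sizeof p->name);
    uint32_t n = end ? (uint32_t)(end - p->name) : (uint32_t)sizeof p->name;
    put_be(out + pos, n, 4);                 /* 32-bit length prefix */
    pos += 4;
    memcpy(out + pos, p->name, n);           /* only n bytes, not 256 */
    pos += n;
    return pos;
}
```

With an empty string the Typecast-style stream is only 28 bytes, in big-endian order, while the DLL reads a 280-byte struct in host byte order.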

11-29-2019 02:37 AM

So, no Typecast of the entire thing, but a Typecast of the scalars and a concatenation with the array. Then split the output and Typecast it back to the scalars.

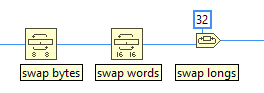

The endianness is pretty simple to solve: just a Swap Bytes and a Swap Words, and optionally a Rotate by 32 bits (only required if there are 64-bit values, but it doesn't hurt if there aren't). Put it into a VIM and it's even reusable...
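A C sketch of that byte reversal, assuming the LabVIEW nodes behave as follows on a U64: Swap Bytes exchanges the bytes within each 16-bit word, Swap Words exchanges the 16-bit words within each 32-bit half, and a Rotate by 32 exchanges the two 32-bit halves. The function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Like Swap Bytes: swap the two bytes inside every 16-bit word. */
static uint64_t swap_bytes(uint64_t x) {
    return ((x & 0x00FF00FF00FF00FFULL) << 8) | ((x >> 8) & 0x00FF00FF00FF00FFULL);
}

/* Like Swap Words: swap the two 16-bit words inside every 32-bit long. */
static uint64_t swap_words(uint64_t x) {
    return ((x & 0x0000FFFF0000FFFFULL) << 16) | ((x >> 16) & 0x0000FFFF0000FFFFULL);
}

/* Like Rotate by 32 on a U64: exchange the two 32-bit halves. */
static uint64_t rotate32(uint64_t x) {
    return (x << 32) | (x >> 32);
}

/* All three steps together fully reverse the byte order of a 64-bit value. */
uint64_t reverse_bytes64(uint64_t x) {
    return rotate32(swap_words(swap_bytes(x)));
}
```

For 32-bit values, Swap Bytes followed by Swap Words already reverses the byte order, so the rotate only matters for 64-bit values.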

11-29-2019 06:01 AM

wiebe@CARYA wrote:

So, no Typecast of the entire thing, but a Typecast of the scalars and a concatenation with the array. Then split the output and Typecast it back to the scalars.

The endianness is pretty simple to solve: just a Swap Bytes and a Swap Words, and optionally a Rotate by 32 bits (only required if there are 64-bit values, but it doesn't hurt if there aren't). Put it into a VIM and it's even reusable...

You consider that simple? And besides, for 64-bit integers you don't need a 32-bit rotate but a Swap Longs, which doesn't exist as a LabVIEW node, so you have to build it yourself. It can be done with Split Number and Join Numbers, but still!

If you really want to go this route, then use Flatten To String and Unflatten From String instead for the scalar numeric part of the cluster, and select "native, host order" as the byte order. That is much more maintainable and needs no complicated VIMs.
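Flattening in native host order boils down to copying each scalar's in-memory bytes. The C sketch below assumes a hypothetical `Scalars` struct for the numeric part of the cluster (four I32 and one DBL); `flatten_native` and `unflatten_native` are illustrative names, not LabVIEW functions. Like Flatten To String, it writes the fields back to back without padding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical scalar part of the cluster. */
typedef struct {
    int32_t a, b, c, d;
    double  value;
} Scalars;

static const size_t kFieldSizes[] = { 4, 4, 4, 4, 8 };

/* Copy each field's bytes as they are in memory: no byte swapping. */
size_t flatten_native(const Scalars *s, uint8_t *out) {
    const void *fields[] = { &s->a, &s->b, &s->c, &s->d, &s->value };
    size_t pos = 0;
    for (size_t i = 0; i < 5; i++) {
        memcpy(out + pos, fields[i], kFieldSizes[i]);
        pos += kFieldSizes[i];
    }
    return pos;
}

/* The inverse: copy the bytes back into the fields, again without swapping. */
size_t unflatten_native(const uint8_t *in, Scalars *s) {
    void *fields[] = { &s->a, &s->b, &s->c, &s->d, &s->value };
    size_t pos = 0;
    for (size_t i = 0; i < 5; i++) {
        memcpy(fields[i], in + pos, kFieldSizes[i]);
        pos += kFieldSizes[i];
    }
    return pos;
}
```

The inline 256-byte string would then be appended as raw bytes after these 24 bytes.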

Just don't forget to pass the stream as a byte array pointer rather than a string pointer if you intend to wire the right-side (output) terminal too. Otherwise the Call Library Node assumes there is a C string in the buffer and will only read back up to the first 0 byte in the "string", which for a binary data structure will usually occur within the first few bytes.
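Why the C string setting breaks binary data: in this sketch, a hypothetical DLL export `FillBuffer` writes a 32-bit value of 1 followed by zeros, and `bytes_seen_as_cstring` (also hypothetical) counts how much of that buffer survives if it is treated as a NUL-terminated string.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical DLL export: fills a caller-allocated buffer with binary data. */
int32_t FillBuffer(uint8_t *buf, int32_t bufLen) {
    if (buf == NULL || bufLen < 8) return -1;
    const int32_t first = 1, second = 0;
    memcpy(buf, &first, sizeof first);        /* stored in host byte order */
    memcpy(buf + 4, &second, sizeof second);
    memset(buf + 8, 0, (size_t)bufLen - 8);
    return 0;
}

/* Number of bytes a C-string reader would keep: everything before the first 0. */
size_t bytes_seen_as_cstring(const uint8_t *buf, size_t len) {
    const uint8_t *nul = memchr(buf, 0, len);
    return nul ? (size_t)(nul - buf) : len;
}
```

On a little-endian host only 1 of the 24 bytes survives, and on a big-endian host none do, which is why the buffer should be configured as an array with an explicit length.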

11-29-2019 06:16 AM - edited 11-29-2019 06:17 AM

@rolfk wrote:

You consider that simple? And besides, for 64-bit integers you don't need a 32-bit rotate but a Swap Longs, which doesn't exist as a LabVIEW node, so you have to build it yourself. It can be done with Split Number and Join Numbers, but still!

A Rotate by 32 bits effectively swaps longs. It does the job reliably, exactly as a Swap Longs would.
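The equivalence can be checked with a short C sketch. `split_number` and `join_numbers` are hypothetical stand-ins for LabVIEW's Split Number and Join Numbers on a U64, used to build the Swap Longs described above; `rotate_by_32` corresponds to a Rotate by 32 bits.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for Split Number on a U64: high and low 32-bit halves. */
static void split_number(uint64_t x, uint32_t *hi, uint32_t *lo) {
    *hi = (uint32_t)(x >> 32);
    *lo = (uint32_t)x;
}

/* Stand-in for Join Numbers: hi becomes the upper 32 bits. */
static uint64_t join_numbers(uint32_t hi, uint32_t lo) {
    return ((uint64_t)hi << 32) | lo;
}

/* Swap Longs built from split + join with the halves exchanged. */
uint64_t swap_longs(uint64_t x) {
    uint32_t hi, lo;
    split_number(x, &hi, &lo);
    return join_numbers(lo, hi);
}

/* Rotating a 64-bit value by 32 bits moves each half into the other's place. */
uint64_t rotate_by_32(uint64_t x) {
    return (x << 32) | (x >> 32);
}
```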

11-29-2019 07:02 AM - edited 11-29-2019 07:05 AM

I see, now I understand! However, using Flatten is still much more efficient on little-endian platforms. Instead of having Typecast swap everything to big endian so that you then have to swap it back before or afterwards IF you are on a little-endian platform (all but the VxWorks PPC platforms nowadays), Flatten with the "native" byte order selector simply puts the data into the string without any extra swap and swap-back business!
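A C sketch comparing the two routes for a single U32, using hypothetical helper names: conversion to big endian (what Typecast does) followed by a swap back only on little-endian hosts, versus the plain native copy that Flatten with native byte order amounts to. Both end up with the same bytes; the first route just performs two reversals that cancel out on little-endian hosts.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* True when the host stores the least significant byte first. */
static int host_is_little_endian(void) {
    const uint16_t probe = 1;
    uint8_t first;
    memcpy(&first, &probe, 1);
    return first == 1;
}

/* Typecast-style: always produce big-endian bytes, regardless of host. */
static void to_big_endian(uint32_t v, uint8_t out[4]) {
    for (int i = 0; i < 4; i++) out[i] = (uint8_t)(v >> (24 - 8 * i));
}

/* Route 1: big endian first, then reverse again IF the host is little-endian. */
void typecast_then_swap_back(uint32_t v, uint8_t out[4]) {
    to_big_endian(v, out);
    if (host_is_little_endian()) {
        for (int i = 0; i < 2; i++) {
            uint8_t t = out[i];
            out[i] = out[3 - i];
            out[3 - i] = t;
        }
    }
}

/* Route 2: native byte order is a plain copy, with no swapping at all. */
void flatten_native_u32(uint32_t v, uint8_t out[4]) {
    memcpy(out, &v, 4);
}
```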

11-29-2019 07:13 AM

Why isn't there an option to tell the "adapt to type" configuration how to treat arrays?

Shouldn't arrays in a cluster be passed either as a pointer to the array or as the raw data (not including the size)?

Wouldn't that make the CLFN a lot more compatible with C/C++?

11-29-2019 07:30 AM

You could not easily have such an option. The array in the C structure could be either a fixed-length array, in which case it's included in the overall size of the structure, or a pointer to an array in which case the data is stored outside the cluster and might vary in size. And then you'd have to deal with a struct containing an array of structs, each of which might have its own array elements; it would get complicated quickly.
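The two C layouts described above, as a sketch with hypothetical type names. A fixed-length array is stored inside the struct and counts toward its size (on the LabVIEW side it is commonly mirrored by a cluster with that many elements); a pointer member only stores an address, so the struct size does not depend on the element count.

```c
#include <assert.h>
#include <stdint.h>

/* Fixed-length array: all 8 elements live inside the struct (36 bytes). */
typedef struct {
    int32_t count;
    int32_t samples[8];
} FixedArrayStruct;

/* Pointer to an array: the elements live elsewhere and can vary in number. */
typedef struct {
    int32_t  count;
    int32_t *samples;
} PointerArrayStruct;

/* Works with either layout once we know where the elements are. */
int64_t sum_samples(const int32_t *samples, int32_t count) {
    int64_t total = 0;
    for (int32_t i = 0; i < count; i++) total += samples[i];
    return total;
}
```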

Also, your suggestion would make an important fundamental change to the way LabVIEW passes data to a library function: it would resize the data by converting a LabVIEW array into something else. Right now, the size of a cluster is exactly the size of the corresponding struct; the only way LabVIEW changes the data is to fix endianness if needed.

11-29-2019 07:49 AM - edited 11-29-2019 07:49 AM

@nathand wrote:

You could not easily have such an option. The array in the C structure could be either a fixed-length array, in which case it's included in the overall size of the structure, or a pointer to an array in which case the data is stored outside the cluster and might vary in size. And then you'd have to deal with a struct containing an array of structs, each of which might have its own array elements; it would get complicated quickly.

Also, your suggestion would make an important fundamental change to the way LabVIEW passes data to a library function: it would resize the data by converting a LabVIEW array into something else. Right now, the size of a cluster is exactly the size of the corresponding struct; the only way LabVIEW changes the data is to fix endianness if needed.

The option could have three values:

+ Pass LabVIEW array (as it is now, and the default)

+ Pass as pointer (always points to the data, IIRC, like passing an array)

+ Pass data (like converting to a cluster, pass the bytes)
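To illustrate these three proposed options (a proposal, not an existing Call Library Node setting), here is a C sketch. `I32ArrayBlock` and `I32ArrayHandle` are hypothetical types modelled on the handle layout LabVIEW uses for arrays (a 32-bit dimension size followed by the elements, reached through a pointer to a pointer); the real declarations come from LabVIEW's `extcode.h` and the C file the Call Library Node can generate. The handle itself is option 1, `as_pointer` corresponds to option 2 and `as_inline_data` to option 3. `new_block` uses `malloc` only to build a test value; LabVIEW allocates handles with its own memory manager.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical mirror of a LabVIEW 1D I32 array block. */
typedef struct {
    int32_t dimSize;
    int32_t elt[];           /* C99 flexible array member */
} I32ArrayBlock;
typedef I32ArrayBlock **I32ArrayHandle;   /* option 1: pass the handle as is */

/* Test helper only: build a block holding n elements. */
I32ArrayBlock *new_block(const int32_t *vals, int32_t n) {
    I32ArrayBlock *blk = malloc(sizeof *blk + (size_t)n * sizeof(int32_t));
    if (blk == NULL) return NULL;
    blk->dimSize = n;
    memcpy(blk->elt, vals, (size_t)n * sizeof(int32_t));
    return blk;
}

/* Option 2, "pass as pointer": point straight at the elements. */
const int32_t *as_pointer(I32ArrayHandle h) {
    return (*h)->elt;
}

/* Option 3, "pass data": copy only the elements (no dimSize) into an inline
   buffer with room for max elements. Returns the number of elements copied. */
int32_t as_inline_data(I32ArrayHandle h, int32_t *dst, int32_t max) {
    int32_t n = (*h)->dimSize < max ? (*h)->dimSize : max;
    if (n < 0) n = 0;
    memcpy(dst, (*h)->elt, (size_t)n * sizeof(int32_t));
    return n;
}
```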

I doubt there will be a lot of cases where (nested) clusters contain a mixture of array pointers, LabVIEW arrays and array data.

It's not a fundamental change in how LabVIEW passes the data: simply use the default to pass data like it always did.

@nathand wrote: Right now, the size of a cluster is exactly the size of the corresponding struct; the only way LabVIEW changes the data is to fix endianness if needed.

IIRC, LabVIEW does change the data. The LabVIEW cluster contains pointers to arrays, so when passing the data to the CLFN, the data is converted to array data including the size.

And it isn't very convenient.

So while LabVIEW changes the data anyway, it might as well change it into the form we want.

11-29-2019 07:51 AM

wiebe@CARYA wrote:

Why isn't there an option to tell the "adapt to type" configuration how to treat arrays?

Shouldn't arrays in a cluster be passed either as a pointer to the array or as the raw data (not including the size)?

Wouldn't that make the CLFN a lot more compatible with C/C++?

The problem is that there is basically no standard at all in C for how to "manage" memory. C was originally designed, on purpose, to be so close to the bare-metal CPU implementation that it left most things entirely to the implementer of the particular compiler and to the programmer writing the code. Who allocates memory, when, and from where is totally up to the implementer of any particular function. And the C syntax (and even the C++ syntax, unless you limit yourself more or less fully to standard template class features) has not a single feature to indicate that directly when declaring a function interface.

There are conventions that have been found to work better than others and are fairly common nowadays, and their intention can often be inferred from the parameter names, but those names have absolutely no meaning in terms of C syntax. The user of those functions has to interpret them, hopefully as intended, and needs to read the corresponding API documentation anyhow.
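Two common conventions look almost identical in a C declaration, which is the point being made here. The sketch uses hypothetical functions: `GetDeviceName` expects the caller to allocate the buffer and pass its size, while `AllocDeviceName` allocates the memory itself and must be paired with `FreeDeviceName`. Only the documentation (or the naming) tells you which convention applies.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Convention A: caller allocates. Never writes past bufLen and returns the
   size needed, including the terminating NUL. */
size_t GetDeviceName(char *buf, size_t bufLen) {
    static const char name[] = "Device-01";
    if (buf != NULL && bufLen > 0) {
        size_t n = strlen(name);
        if (n > bufLen - 1) n = bufLen - 1;   /* truncate, keep room for NUL */
        memcpy(buf, name, n);
        buf[n] = '\0';
    }
    return sizeof name;
}

/* Convention B: callee allocates; the caller must release the result with
   the matching free function, not with its own allocator. */
char *AllocDeviceName(void) {
    const char *name = "Device-01";
    size_t len = strlen(name) + 1;
    char *copy = malloc(len);
    if (copy != NULL) memcpy(copy, name, len);
    return copy;
}

void FreeDeviceName(char *p) {
    free(p);
}
```

A Call Library Node can serve the first convention directly with a preallocated string or array; the second one needs an extra call to the matching free function once the data has been copied.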

Creating a Call Library Function configuration that allowed configuring all these features would make the dialog several times more complex than it is now, and for 95% of users it is already way too complex to use.

@nathand wrote:

Also, your suggestion would make an important fundamental change to the way LabVIEW passes data to a library function: it would resize the data by converting a LabVIEW array into something else. Right now, the size of a cluster is exactly the size of the corresponding struct; the only way LabVIEW changes the data is to fix endianness if needed.

There is absolutely no implicit endianness fixing when passing data to a shared library. That is because LabVIEW data in memory is always kept in whatever endianness the current platform requires. Endianness only comes into play when you convert native data into an external format (a byte stream to send somewhere, including to a file). The only functions in LabVIEW that deal with endianness correction are:

- File I/O functions when reading or writing binary data (with an endianness selector since LabVIEW 8.0; before that they always assumed the flattened side to be big endian)

- A special form of network read and write nodes (I believe never officially released) that directly accept binary data structures, like the binary read and write nodes do.

- Flatten and Unflatten nodes, including their to/from Variant versions (with an endianness selector since LabVIEW 8.0; before that they always assumed the flattened side to be big endian)

- Typecast, which always performs big-endian byte order correction.
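A C sketch of where byte order actually matters: only when a native value is written to or read from a byte stream. `ByteOrder`, `write_u32` and `read_u32` are hypothetical names; the selector mirrors the big-endian / native / little-endian choice offered by Flatten To String and the binary file functions.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical byte order selector. */
typedef enum { ORDER_BIG, ORDER_NATIVE, ORDER_LITTLE } ByteOrder;

/* Serialize a native value into 4 bytes in the chosen order. */
void write_u32(uint32_t v, ByteOrder order, uint8_t out[4]) {
    switch (order) {
    case ORDER_NATIVE:
        memcpy(out, &v, 4);                   /* in-memory layout, no swapping */
        break;
    case ORDER_BIG:
        for (int i = 0; i < 4; i++) out[i] = (uint8_t)(v >> (24 - 8 * i));
        break;
    case ORDER_LITTLE:
        for (int i = 0; i < 4; i++) out[i] = (uint8_t)(v >> (8 * i));
        break;
    }
}

/* Deserialize 4 bytes in the chosen order back into a native value. */
uint32_t read_u32(const uint8_t in[4], ByteOrder order) {
    uint32_t v = 0;
    switch (order) {
    case ORDER_NATIVE:
        memcpy(&v, in, 4);
        break;
    case ORDER_BIG:
        for (int i = 0; i < 4; i++) v = (v << 8) | in[i];
        break;
    case ORDER_LITTLE:
        for (int i = 3; i >= 0; i--) v = (v << 8) | in[i];
        break;
    }
    return v;
}
```

Data handed to a DLL as a struct never goes through such a conversion; it is already in the native layout.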

11-29-2019 08:16 AM

Thank you for the endianness explanation. For some reason I've had it in my head that LabVIEW is natively the same endianness on all platforms, but it makes more sense that this only applies to data that could be coming from another platform.

So endianness issues with DLLs only occur when passing an array of raw bytes, not structured data.